HA, DR, and Scalability use cases

Data replication delivers data to multiple servers for high availability, read scalability, and disaster recovery.

Data replication is built into the FairCom server. Parallel processing threads replicate data at high speed while ensuring transaction consistency. Data can be asynchronously or synchronously replicated to one or more nodes. Asynchronous replication is ideal for replicating data across data centers or for high-speed, eventually consistent use cases. Synchronous replication is ideal for implementing clustered nodes for high-availability solutions.

Replication ensures all changes to a table/file are replicated, including the replication of newly created, altered, and deleted tables and files. The data that is replicated in a table/file can be filtered such that it replicates a subset of the table's data.

A browser-based graphical user interface (GUI), called Replication Manager, runs in a central location. It makes it easy to configure, manage, and monitor data replication across hundreds of servers and thousands of tables and files. FairCom Replication can also be automated through a JSON/HTTP web service API and a C/C++ API.

HA, DR, and Scalability use cases for Replication Manager

FairCom has certified its replication technology with Pacemaker on Red Hat Enterprise Linux. You can use Pacemaker to implement a high-availability solution with FairCom DB and RTG. Pacemaker detects hardware and software failures and properly fails over the database from a primary computer to a secondary computer.

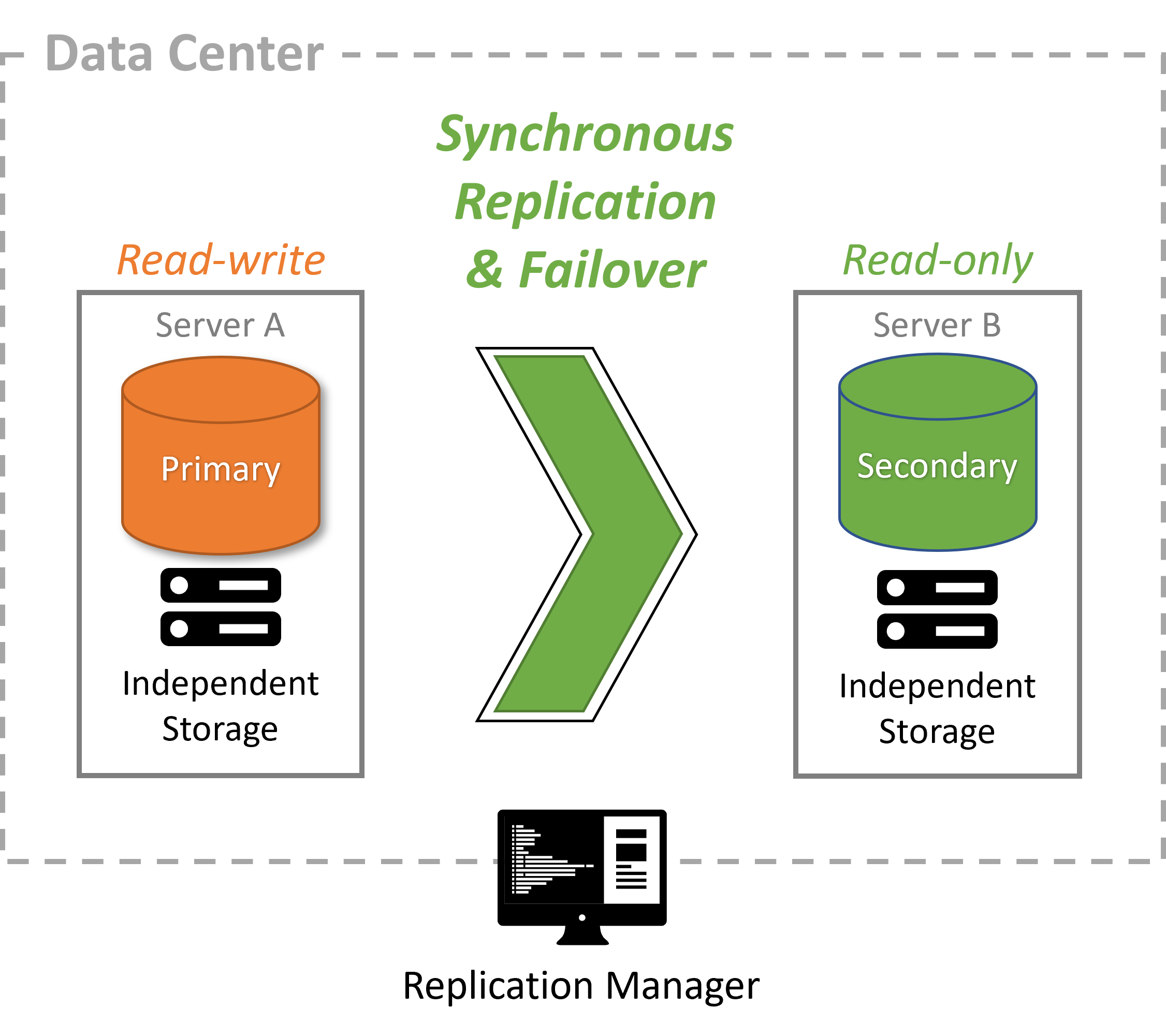

Use case

Ideal for business continuity of multiple, mission-critical applications. It allows you to read and write data on the primary server and read data on the secondary server. The primary and secondary servers have independent storage for faster operations on each server. Failover does not lose data. When the primary server fails, currently running transactions return an error so the application can rerun them. Committed transactions are always on the secondary server for when it becomes primary.

Why:

To ensure high availability without data loss during failover.

To use the read-only secondary server for reporting and analytics.

How:

FairCom synchronous replication ensures both database servers always have the same committed data.

Pacemaker provides a virtual IP address that it controls to ensure applications can connect only to one database server at a time.

Pacemaker determines when to fail over by detecting hardware or software failure.

Pacemaker prevents a failed server from being accessed by clients, and, thus, prevents the possibility of inconsistent data.

Pacemaker notifies the secondary FairCom server of a failover. It applies all pending commit logs.

FairCom secondary server notifies all client applications of the failover event so they can reconnect to it.
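
Because transactions in flight during a failover return an error so the application can rerun them, the client usually needs a small retry loop. The following is a minimal sketch of that pattern, not FairCom's client API: `FailoverError`, `connect`, and `run_with_retry` are hypothetical names introduced here, and `connect` stands in for whatever call reconnects through the virtual IP address.

```python
import time


class FailoverError(Exception):
    """Hypothetical error a client sees when the primary fails mid-transaction."""


def run_with_retry(transaction, connect, max_attempts=3, delay_seconds=0.0):
    """Run transaction(conn); after a failover error, reconnect and rerun it.

    `connect` is an assumed callable that returns a fresh connection,
    for example by connecting to the cluster's virtual IP address.
    """
    last_error = None
    for _ in range(max_attempts):
        conn = connect()  # a new connection reaches whichever server is primary
        try:
            return transaction(conn)
        except FailoverError as err:
            last_error = err
            time.sleep(delay_seconds)  # give the secondary time to take over
    raise last_error
```

Only the uncommitted transaction is rerun. Committed transactions are already on the secondary server, so the application does not need to replay them.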

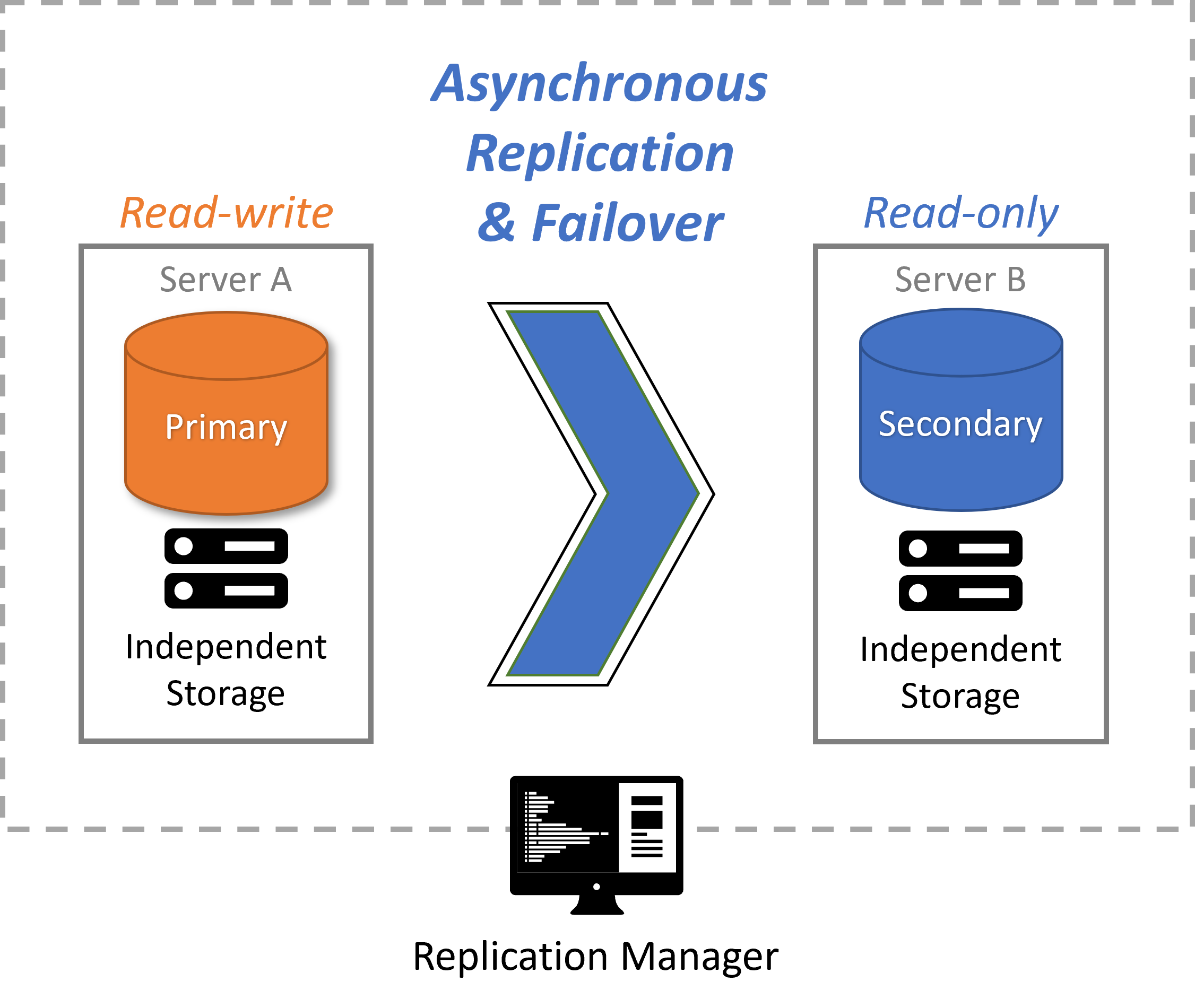

FairCom Replication makes it easy to create a read-only copy of your data on a secondary server with no performance impact to the primary server. The data in the read-only copy is slightly behind the primary server because of network latency, but it is always transactionally consistent at any point in time.

Why:

Scale an application by running reports and analytics on additional servers without impacting online transactional operations on the primary server.

Create a live, read-only copy of your data for quick recovery.

Replicate data to a geographically distant region for centralized data warehousing, reporting, analytics, machine learning, etc.

How:

Use asynchronous replication to replicate an entire database -- or a subset of it -- to another server.

Create copies of your data on multiple secondary servers without impacting the performance of the primary server.

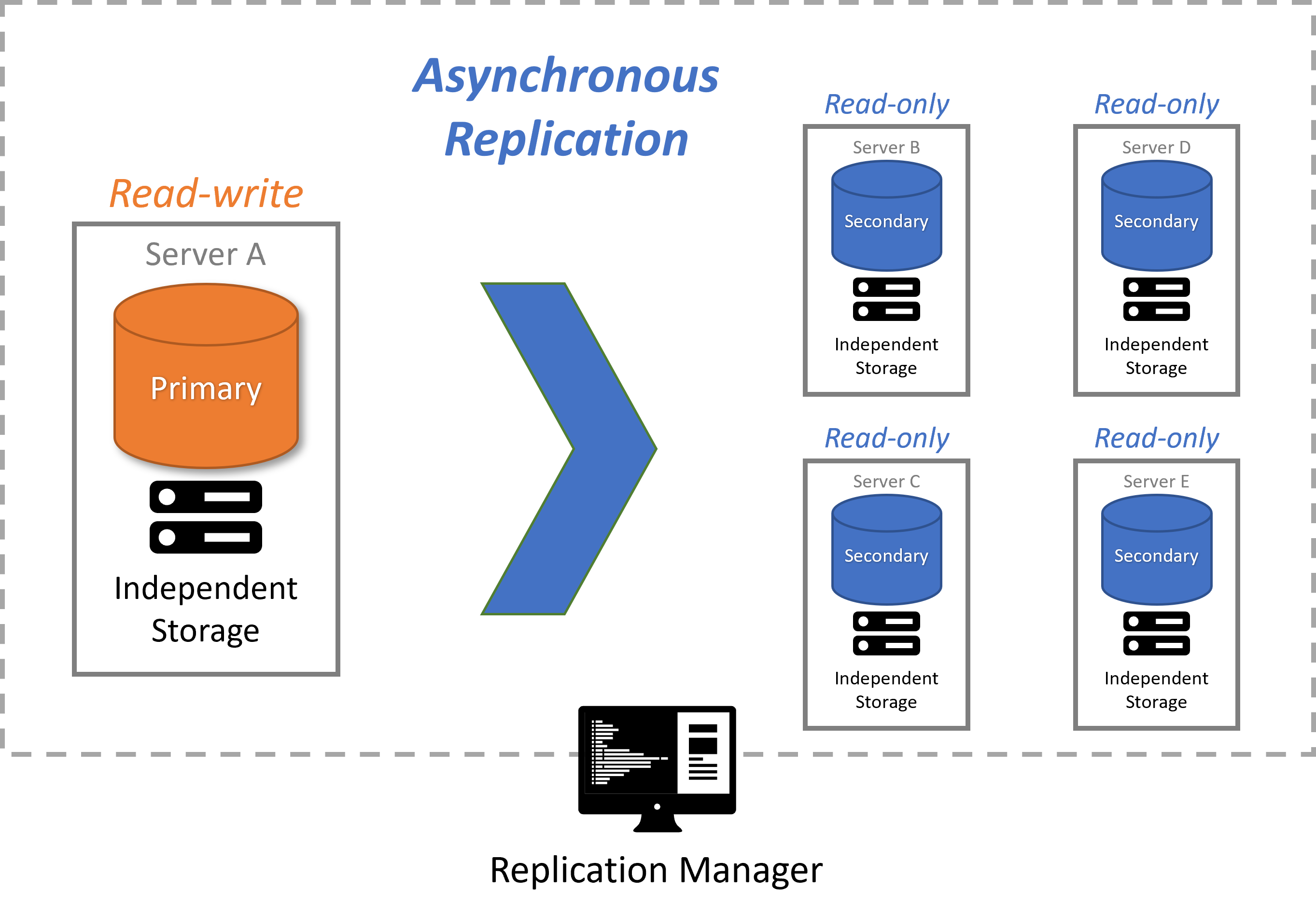

Why:

Scale read-only operations across many servers.

How:

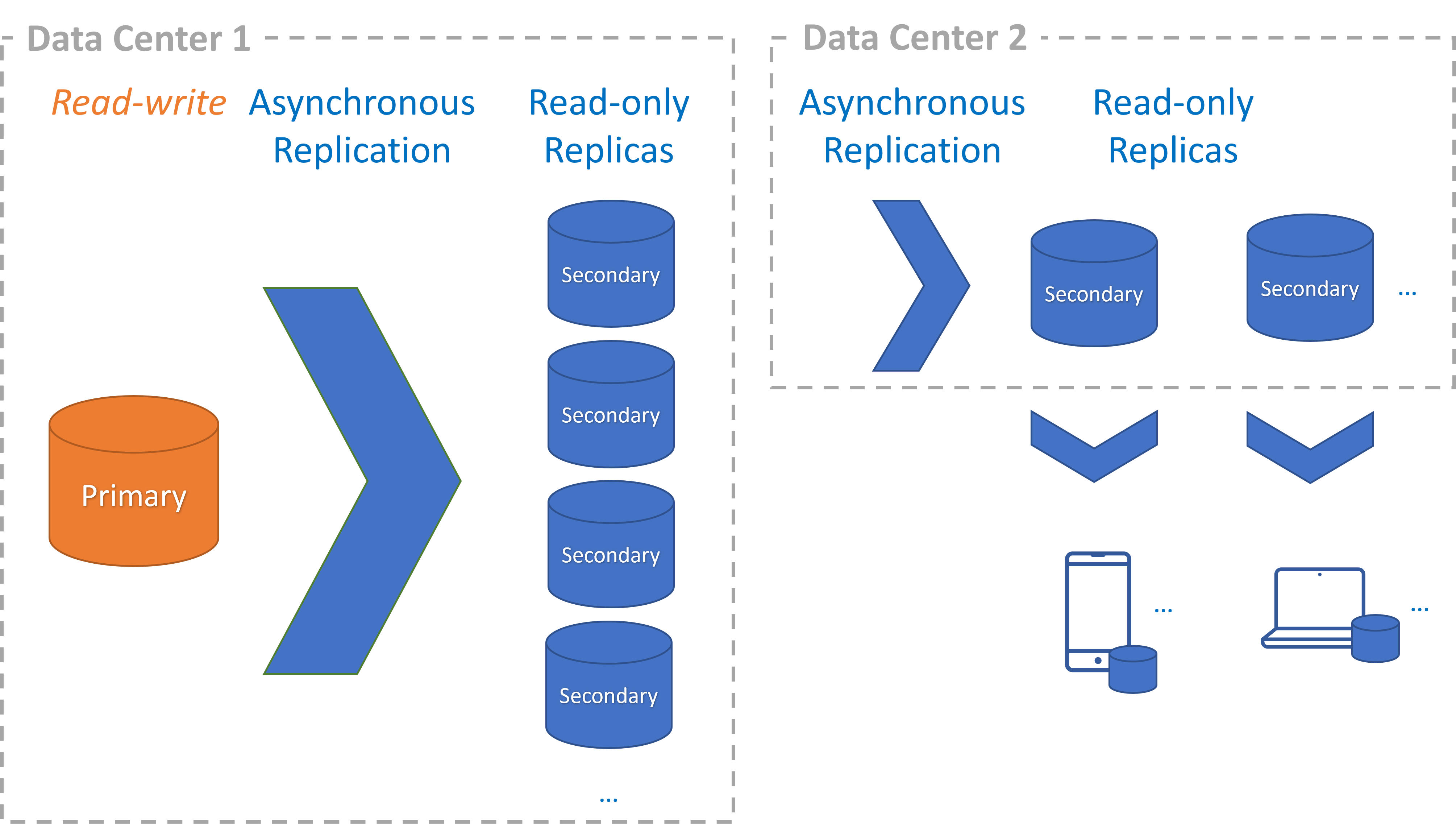

Use asynchronous replication to replicate entire databases to many other servers in the same data center.

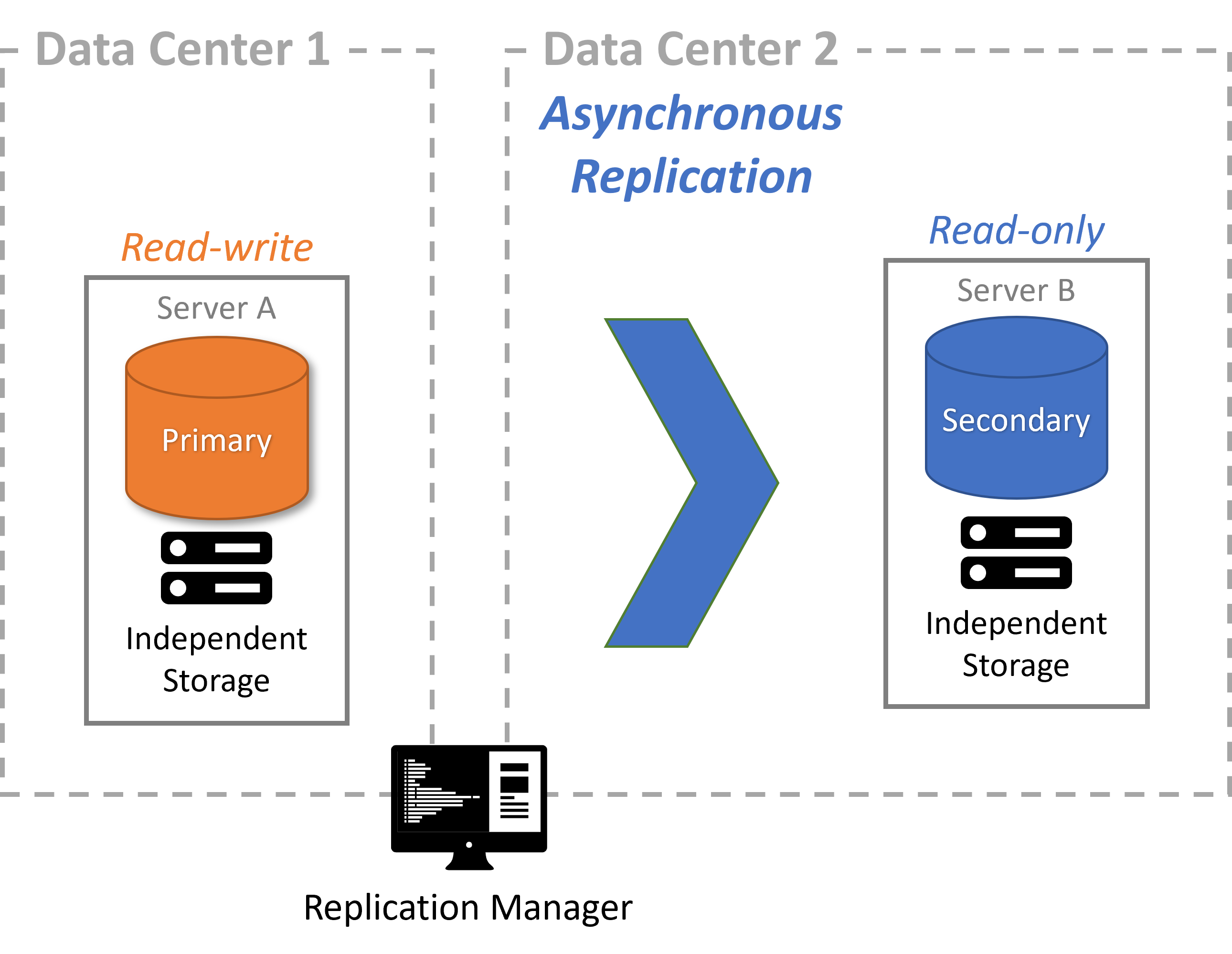

Continuously preserve an up-to-date copy of your data on remote servers for disaster recovery (DR). The data in the DR server is slightly behind the primary server because of network latency, but it is always transactionally consistent at any point in time.

Why:

Survive a disaster by preserving a live copy of your database in a geographically distant region.

Quickly start up applications in the geographically distant region after a disaster because databases are always running with live, up-to-date data.

How:

Use asynchronous replication to replicate your entire database to one or more servers in one or more geographically dispersed data centers.

Manually change the read-only replicas to become read-write primary servers when the primary data center is hit by a disaster.

Replicate an entire database or subsets of it across many remote servers and clients, horizontally scaling the data to support very large numbers of read-only users.

Why:

Scale read-only transactions across data centers, desktop apps, and mobile apps.

How:

Use asynchronous replication to replicate the entire database to many other computers.

Chain asynchronous replication processes to achieve massive scale. For example, replicate one server to twenty and then replicate each of those twenty to twenty more, and so forth. Doing this six times replicates data to 64 million computers.

Replicate subsets of data to each computer to minimize computer and network resources. For example, replicate only a user's working set of data to their mobile phone for quick, local, read-only access.
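
The 64 million figure in the chaining example follows from simple exponentiation: with a fan-out of twenty at each level, level n holds 20^n computers. A short sketch of that arithmetic, using the illustrative function names `replicas_at_level` and `total_replicas`:

```python
def replicas_at_level(fan_out: int, level: int) -> int:
    """Computers at a given level of the replication chain (level 1 = first hop)."""
    return fan_out ** level


def total_replicas(fan_out: int, levels: int) -> int:
    """All computers that receive data, summed over every level of the chain."""
    return sum(replicas_at_level(fan_out, level) for level in range(1, levels + 1))


print(replicas_at_level(20, 6))  # 64000000
print(total_replicas(20, 6))     # 67368420
```

The last level alone accounts for the 64 million computers. Counting the intermediate levels as well brings the total to about 67 million.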

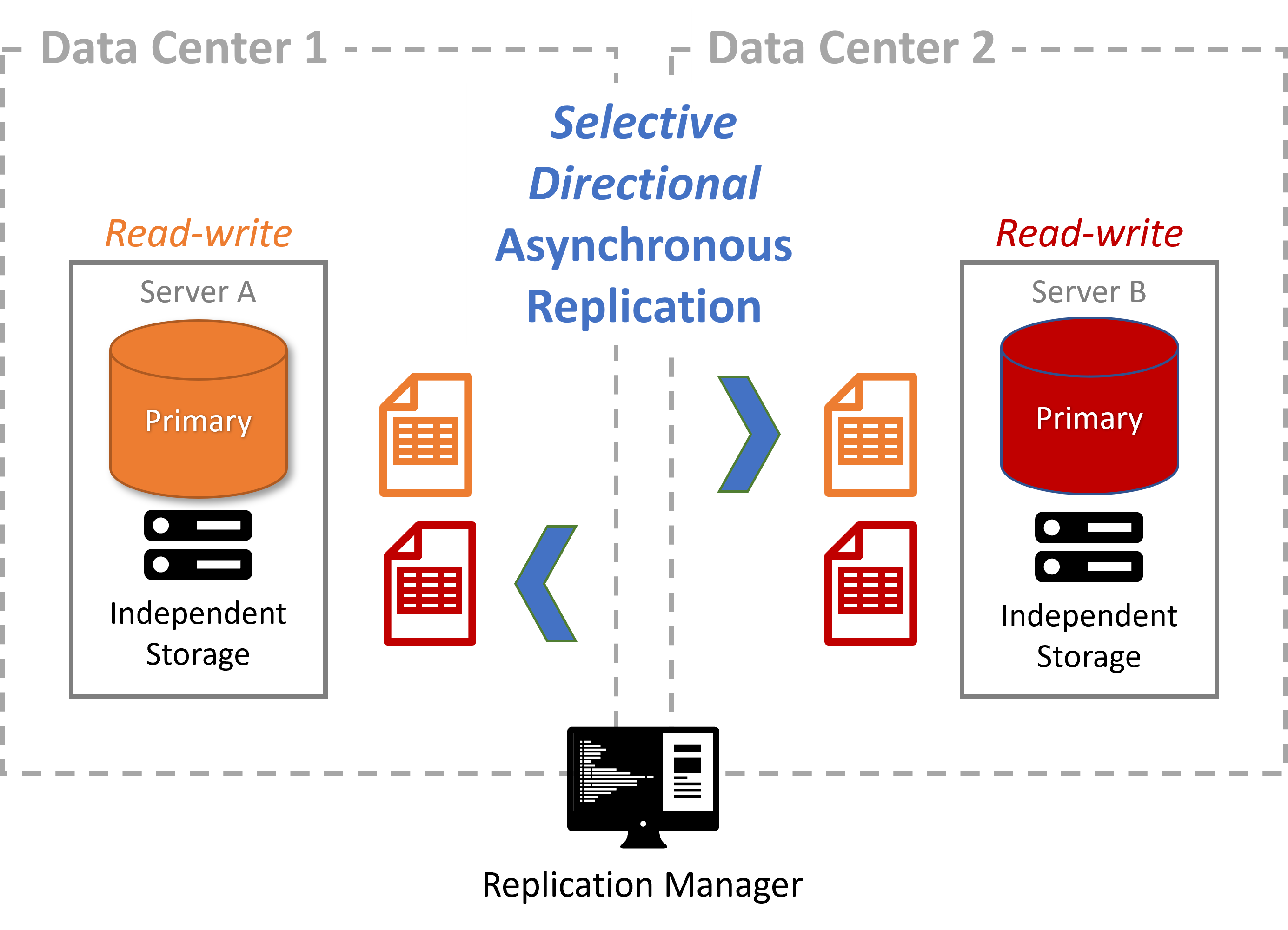

Selectively replicate shared data within and across data centers for globally distributed applications.

Why:

Make all changes to master data and lookup data in one or more centralized databases.

Instantly replicate these changes across globally distributed applications to ensure they all use the same master and lookup data.

How:

Use selective asynchronous replication to replicate specific tables or subsets of data in specific tables from one to many servers.

Selectively limit which data is replicated across data centers for regulatory compliance.

Why:

Government regulations require specific data to remain in country while requiring other data to be replicated across multiple countries.

How:

Use selective asynchronous replication to replicate specific data in specific tables from one to many servers within and across data centers.
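
Conceptually, selective replication applies a filter per target server so that each one receives only the rows it is allowed to hold. The sketch below models that idea in plain Python. It is not FairCom's filter syntax or API. The row layout, the server names, and `plan_replication` are assumptions made for illustration.

```python
from typing import Callable, Dict, List

Row = Dict[str, str]
RowFilter = Callable[[Row], bool]


def plan_replication(rows: List[Row], targets: Dict[str, RowFilter]) -> Dict[str, List[Row]]:
    """Return, for each target server, the rows its filter allows to be replicated."""
    return {name: [row for row in rows if keep(row)] for name, keep in targets.items()}


# Hypothetical rows tagged with the country that owns them.
rows = [
    {"id": "1", "country": "DE", "kind": "customer"},
    {"id": "2", "country": "US", "kind": "customer"},
    {"id": "3", "country": "DE", "kind": "lookup"},
]

# Hypothetical rules: customer rows stay in their own country, lookup rows go everywhere.
targets = {
    "de-server": lambda row: row["country"] == "DE" or row["kind"] == "lookup",
    "us-server": lambda row: row["country"] == "US" or row["kind"] == "lookup",
}

plan = plan_replication(rows, targets)
print([row["id"] for row in plan["de-server"]])  # ['1', '3']
print([row["id"] for row in plan["us-server"]])  # ['2', '3']
```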

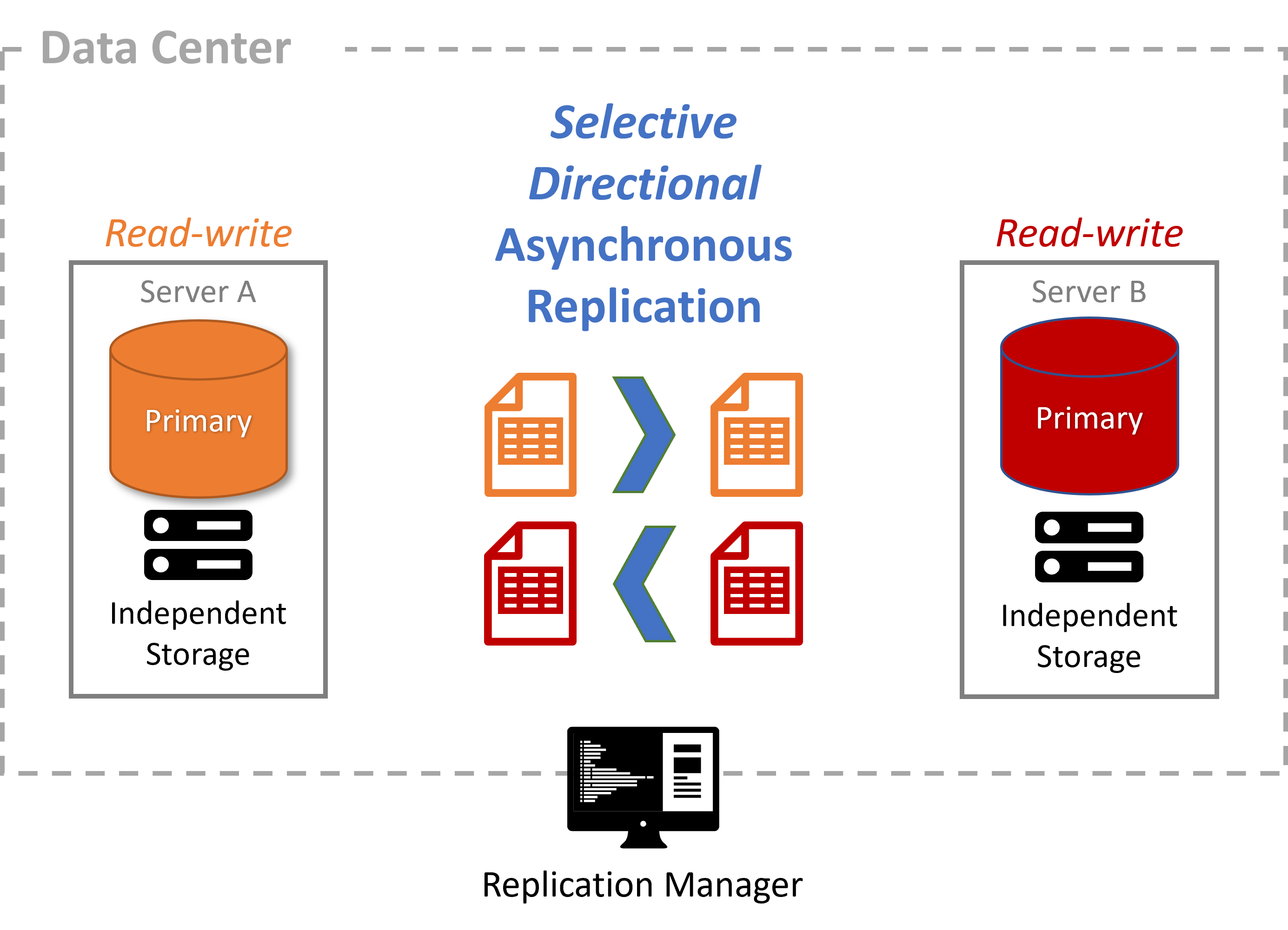

Avoid slow joins across microservices by providing each microservice with its own data.

Why:

Each microservice has its own dedicated database for independent upgrades.

Each microservice needs selective master, lookup, and transactional data from other microservices because joins across microservices are slow.

How:

Selectively replicate data across microservice databases.

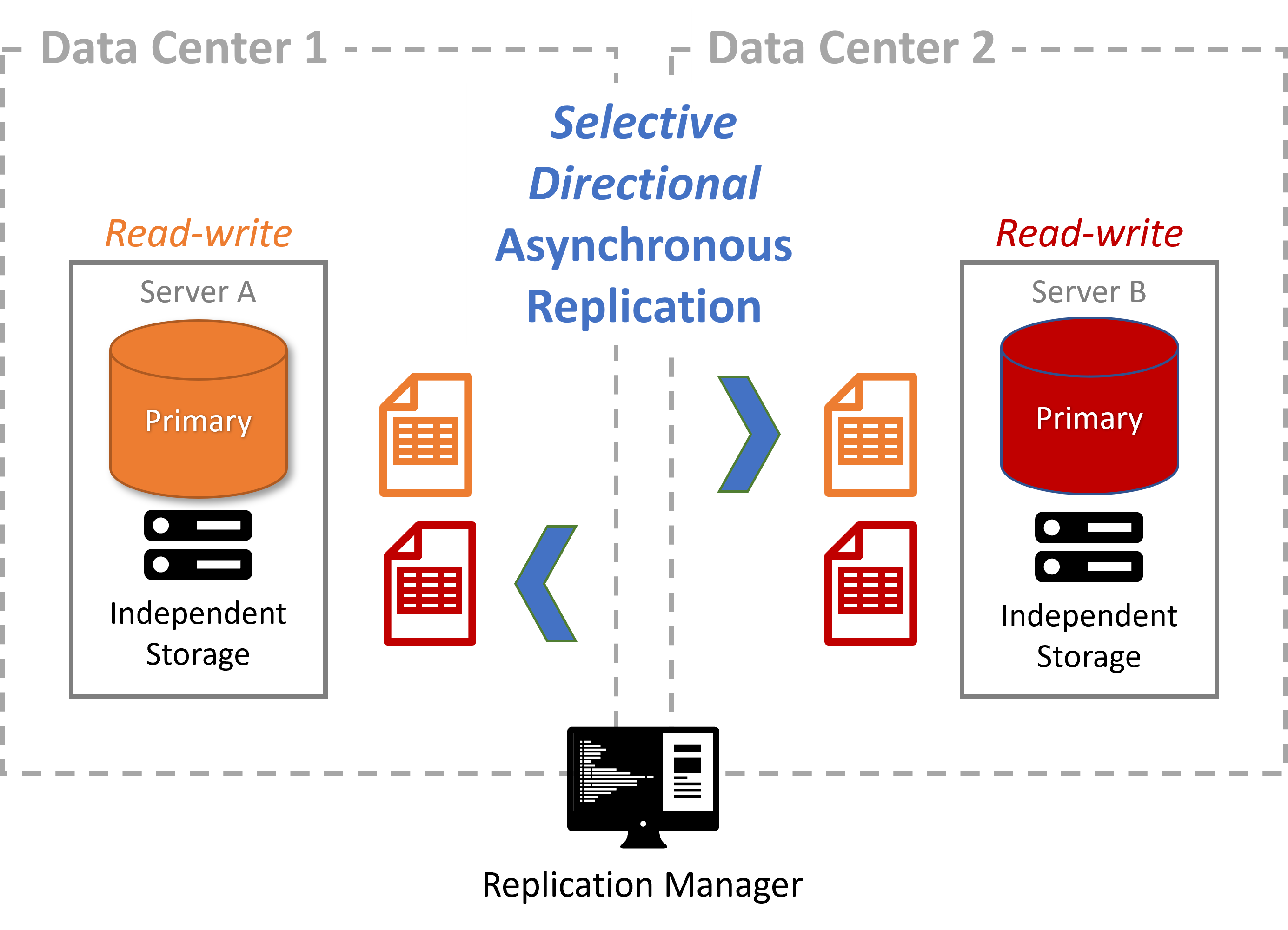

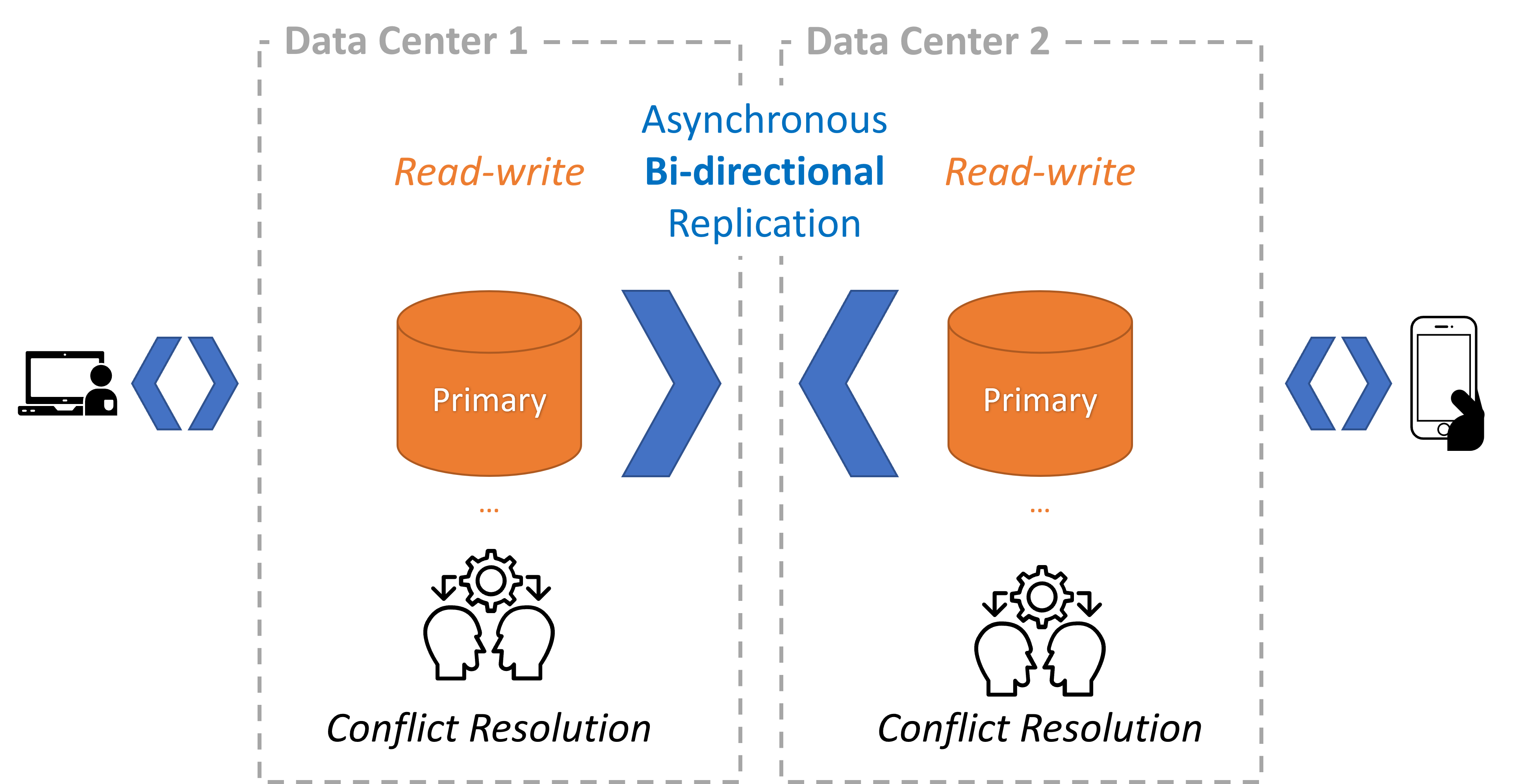

Allow users to read and write data using data centers close to them for maximal availability and performance.

Why:

Allow users to read and write data to their closest data center for the following reasons:

Increases application performance because it minimizes network latency.

Increases availability because it allows users to access other data centers for reads and writes when the closest data center is unavailable.

How:

Use bidirectional asynchronous replication to replicate all data changes between servers.

Use conflict resolution callbacks to deal with data conflicts caused by simultaneous writes to the same data.
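
One common policy for such a callback is last-writer-wins. The sketch below shows that policy under stated assumptions: the `Version` record and the `last_writer_wins` function are hypothetical and do not reflect the signature of FairCom's conflict resolution callbacks. It also assumes that clocks across data centers are synchronized closely enough for timestamps to be comparable.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Version:
    """Hypothetical view of one side of a conflicting write."""
    value: str
    updated_at: float  # seconds since the epoch at the writing data center
    origin: str        # name of the data center that made the write


def last_writer_wins(local: Version, remote: Version) -> Version:
    """Keep the newer write; break timestamp ties by origin name.

    The tie-break makes the result independent of argument order, so
    every data center resolves the same conflict to the same winner.
    """
    return max(local, remote, key=lambda v: (v.updated_at, v.origin))
```

A deterministic tie-break matters with bidirectional replication: if two data centers picked different winners for the same conflict, their copies would diverge permanently.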

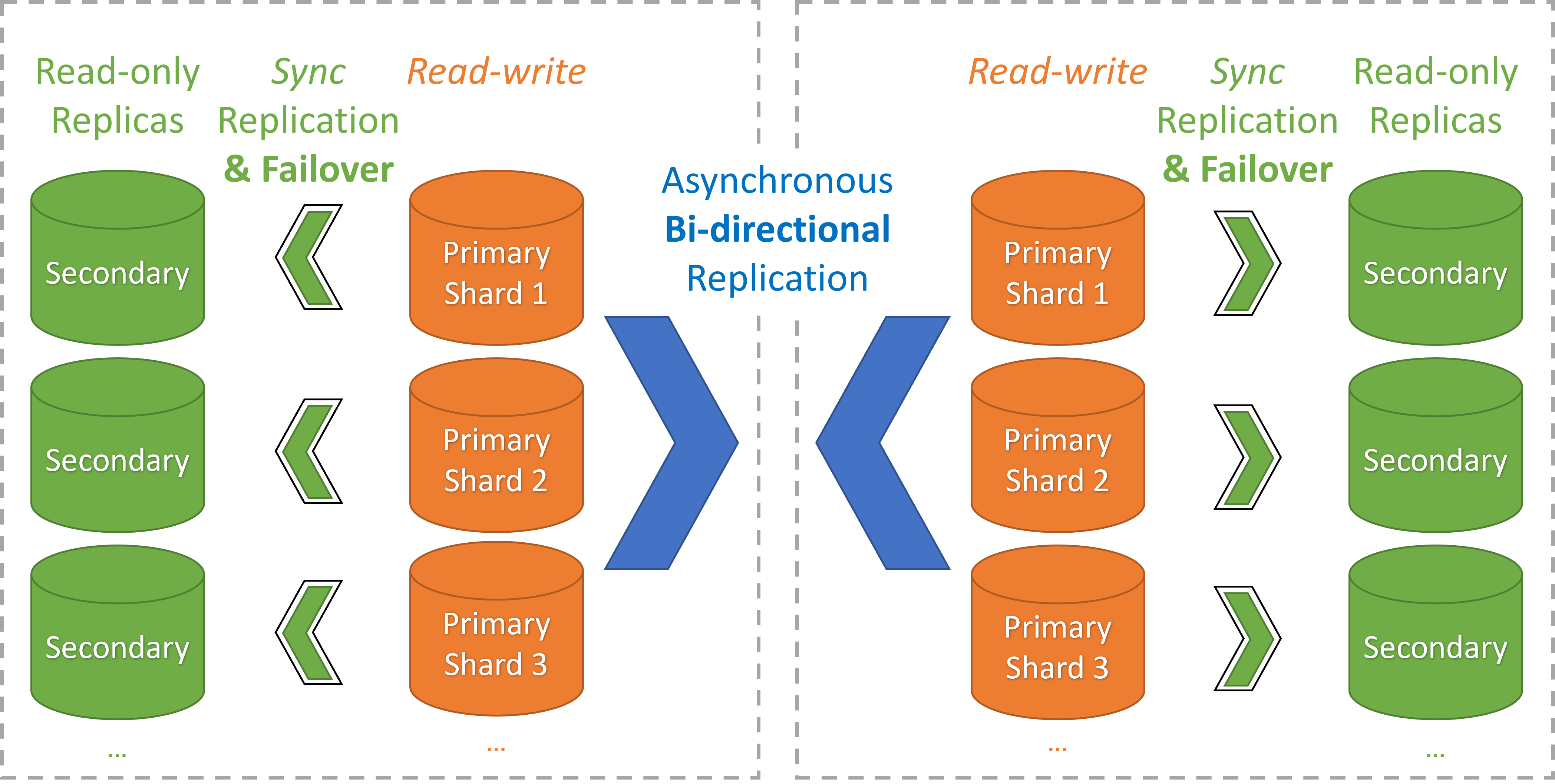

Allow users to read and write data using data centers close to them for maximal availability and performance. Shard data for horizontal scalability. Lastly, provide high availability failover for each shard.

Why:

Allow users to read and write data to their closest data center:

Increases application performance because it minimizes network latency.

Increases availability because it allows users to access other data centers for reads and writes when the closest data center is unavailable.

Shard data across multiple servers to scale the application horizontally.

Provide high availability for each shard to minimize downtime, increase data durability, and scale reads.

How:

Use bidirectional asynchronous replication to replicate data changes of each shard across data centers.

Use conflict resolution callbacks to deal with any data conflicts caused by simultaneous writes to the same data.

Use synchronous replication to replicate each shard in each data center to a secondary server.

When a primary shard fails, use automatic database failover to fail over to its secondary shard.

Store the shard data boundaries in a table and replicate that table across all data centers.

Use the FairCom Replication API in the application to connect simultaneously to all shard database servers. Its remote-control ability lets the application process data in all the shard database servers as if they were a single database. The application can use the shard data boundaries table to determine which shard holds the desired data and switch to that shard before processing its data.
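
The lookup against the shard data boundaries table can be sketched as a binary search over sorted lower bounds. This is a minimal illustration that assumes range-based sharding on an integer key. The table contents, the server names, and `find_shard` are hypothetical and are not part of the FairCom Replication API.

```python
from bisect import bisect_right

# Hypothetical shard boundaries table: (lowest key in the shard, server name),
# sorted by lowest key. In practice this would be read from the replicated table.
SHARD_BOUNDARIES = [(0, "shard-a"), (1000, "shard-b"), (2000, "shard-c")]


def find_shard(key: int, boundaries=SHARD_BOUNDARIES) -> str:
    """Return the server whose key range contains `key`."""
    lower_bounds = [low for low, _ in boundaries]
    # bisect_right counts the lower bounds <= key; the last of them owns the key.
    index = bisect_right(lower_bounds, key) - 1
    if index < 0:
        raise KeyError(f"key {key} is below the lowest shard boundary")
    return boundaries[index][1]


print(find_shard(999))   # shard-a
print(find_shard(1000))  # shard-b
```

Because the boundaries table is replicated to every data center, each application instance can perform this lookup locally before switching to the shard that holds the data.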