Linux Pacemaker

Build a Highly Available Cluster on Linux for FairCom DB

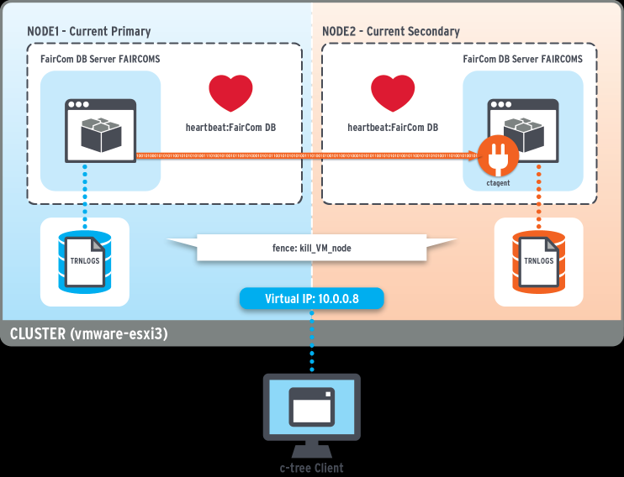

This section describes how to create a two-node, highly available (HA) solution running FairCom DB on a clustered Red Hat Enterprise Linux (RHEL) environment. The two nodes are a primary and a secondary server, referred to in RHEL as a master and slave respectively. The HA solution uses the FairCom synchronous replication feature to ensure that the data on both FairCom DB servers is always identical.

Database clients connect to a virtual IP address, which accepts client connections regardless of which server node in the cluster is actually running database services. The cluster software performs the following functions:

Provide a virtual IP address (VIP).

Detect failures in the hardware, OS, and database.

Fail over from the primary node to the secondary node.

Fence off the primary server during a failover event to prevent scenarios that can corrupt data, such as split-brain.

Data is synchronized between the two database servers using FairCom replication. It replicates all data changes from the primary server to the secondary server. It uses synchronous replication to ensure data is always the same on both servers. You also have the option to use the slightly faster asynchronous replication, but this introduces a chance that data will be lost during a failover. All data changes go to the primary server. Read-only operations can go to either the primary or secondary server.

Failures are detected by Pacemaker. Pacemaker monitors the primary and secondary servers. It also monitors the replication agent on the secondary FairCom DB server. When it detects a failure of the current primary server, it fences off the primary database from all communications and promotes the secondary database server to become the new primary. Failures of the secondary FairCom DB server are also detected, which further protects data integrity. FairCom DB resource agents define and configure this process.

FairCom DB replication for availability

To ensure all data is the same on both node one and node two, we use FairCom DB replication with synchronous commit. When a transaction on the primary server is committed, it guarantees that the secondary server has access to the log data from the primary server.

When in synchronous mode, changes applied to the secondary server are typically faster because the secondary server is read-only. If there is a heavy read load on the secondary server, changes may be applied slightly slower. This does not affect high availability because all transactions are always persisted on disk on both servers before the database returns a commit confirmation to the application.

Build a highly available cluster on Linux for FairCom DB

Cluster

A Cluster is a collection of nodes and resources (usually services) managed as a set for high availability.

Pacemaker

Pacemaker is a high-availability cluster resource manager based on the Open Cluster Framework (OCF). Pacemaker provides detection of and recovery from node-level and service-level failures. Faulty nodes can be fenced ensuring data integrity. Pacemaker configurations define clusters, nodes, resources, alerts, and STONITH fencing agents.

Pacemaker cluster management is included with many major Linux distributions. It is a cluster resource manager and executes as a daemon called CRMd on each node. All cluster changes are routed through it, such as node instantiations, moves, starts, stops, and information queries. Pacemaker can script and cluster any resource, such as FairCom DB.

Pacemaker uses quorum voting (votequorum service) to determine when a cluster node should be fenced or isolated to prevent split-brain phenomena. More than 50% of nodes must agree on cluster operations. If 50% or more of the nodes in a cluster are offline, clustered services are stopped. Pacemaker utilizes Corosync as its high-availability framework, and a specific Corosync option provides for two-node clusters, in which a single remaining node can retain quorum.
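The majority rule can be sketched with a little shell arithmetic. This only illustrates the arithmetic and is not how votequorum is implemented. The function names are hypothetical, and each node is assumed to carry exactly one vote.

```shell
#!/bin/sh
# Hypothetical helpers illustrating the majority rule; assumes one vote per node.

# Votes needed for quorum: more than half of the total.
quorum_votes() {
    echo $(( $1 / 2 + 1 ))
}

# Prints "yes" when the online nodes hold a strict majority, otherwise "no".
has_quorum() {
    total=$1
    online=$2
    if [ "$online" -ge "$(quorum_votes "$total")" ]; then
        echo yes
    else
        echo no
    fi
}

has_quorum 3 2   # two of three nodes online
has_quorum 2 1   # one of two nodes online
```

Under strict majority voting, a two-node cluster loses quorum as soon as either node goes down, which is why two-node clusters need special handling.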

Corosync

Corosync is a distributed messaging and quorum platform for cluster-aware components. Cluster communications go through the Corosync daemon utilizing an in-memory database. It manages cluster membership and messaging communications as well as quorum rules, and cluster state transfer between nodes. Corosync uses the kronosnet library.

Node

A Node is an RHEL host participating in the cluster. Each cluster node runs a local resource manager daemon (LRMd) which is the interface between CRMd and local cluster resources on the node.

One node in the cluster is the Designated Coordinator (DC) which stores and distributes cluster state to all cluster nodes. This cluster state is maintained in the Cluster Information Base (CIB).

A cluster resource is an application or data managed by the cluster — for example, a database instance running in an RHEL cluster can be configured to be a cluster resource. In this definition, FairCom DB is defined as a resource that requires cluster management to run its services in a highly available configuration.

Resource agent

A resource agent is an abstraction that allows Pacemaker to manage services it knows nothing about. This abstraction takes the form of an executable script that contains logic for starting, stopping, and checking the health of a defined resource, such as a database or other service provided by the cluster.
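The general shape of such a script can be sketched as follows. This is not the FairCom DB resource agent. The "service" is a background sleep, PIDFILE is a hypothetical path, and the function names are invented for illustration. The exit codes (0 for success, 1 for a generic error, 3 for an unimplemented action, 7 for not running) follow the OCF convention. A real agent also implements actions such as meta-data and validate-all.

```shell
#!/bin/sh
# Sketch of a resource agent's core actions. Everything here is illustrative:
# the "service" is a background sleep and PIDFILE is a hypothetical path.
OCF_SUCCESS=0
OCF_ERR_GENERIC=1
OCF_ERR_UNIMPLEMENTED=3
OCF_NOT_RUNNING=7
PIDFILE="${PIDFILE:-/tmp/demo_service.pid}"

demo_monitor() {
    # Running if the pid file exists and the process answers signal 0.
    [ -f "$PIDFILE" ] && kill -0 "$(cat "$PIDFILE")" 2>/dev/null && return $OCF_SUCCESS
    return $OCF_NOT_RUNNING
}

demo_start() {
    demo_monitor && return $OCF_SUCCESS      # already running
    sleep 300 >/dev/null 2>&1 &              # stand-in for the real service
    echo $! > "$PIDFILE"
    demo_monitor || return $OCF_ERR_GENERIC
    return $OCF_SUCCESS
}

demo_stop() {
    if demo_monitor; then
        kill "$(cat "$PIDFILE")" 2>/dev/null
    fi
    rm -f "$PIDFILE"
    return $OCF_SUCCESS
}

# Dispatch on the action name, which the cluster manager passes as $1.
demo_agent() {
    case "$1" in
        start)   demo_start ;;
        stop)    demo_stop ;;
        monitor) demo_monitor ;;
        *)       return $OCF_ERR_UNIMPLEMENTED ;;
    esac
}
```

The cluster manager would call such a script with an action name, for example `demo_agent monitor`, and act on the exit code alone.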

Fencing agents

Fencing agents regularly check the status of a resource and, if Pacemaker determines that a resource has failed, one or more agents ensure the resource stays down. Fencing agents implement Shoot The Other Node In The Head (STONITH) which is usually targeted to the hardware layer ensuring the complete removal of a node from the cluster.

Choose the fencing method that best suits your requirements and hardware capabilities. Common methods include the following:

system reset

powering off the host

deactivating a VM

removing network access

Fencing

Fencing is an important component of any successful cluster because it ensures that a failed node is unable to run or provide any services at all. This prevents scenarios such as split-brain, which, in the case of a database, allows data to be written to both servers in the cluster. Split-brain incorrectly puts some data on one server and some data on the other, which breaks data integrity, causes errors, and makes it very difficult to recover correct data.

Groups

Groups are created to manage multiple cluster resources as a unit. A group defines the following for its resources:

Location

Start order

Reverse stop order of multiple resources that need to function as one unit

Enable the repository that provides Pacemaker (rhel-8-for-x86_64-highavailability-rpms) using the following command.

sudo dnf config-manager --enable rhel-8-for-x86_64-highavailability-rpms

If configuring a shared storage cluster, also enable the resilientstorage repository.

sudo dnf config-manager --enable rhel-8-for-x86_64-resilientstorage-rpms

Install Pacemaker, the pcs command-line utility, and the fencing agents using the following command.

sudo dnf install pcs pacemaker fence-agents-all

Verify that Pacemaker was installed using the following command.

sudo pcs -h

This procedure describes how to initialize two FairCom DB synchronous replication nodes as VMware virtual machines running identical Red Hat Enterprise Linux 8 environments configured with Pacemaker clustering software.

Node one

This is considered the active/hot node. This instance of FairCom DB is a read-write server. The active node is the only FairCom DB server that should process changes to data.

Node two

This is considered the passive/warm node. This instance of FairCom DB is a read-only server. It could be used for query reporting and analytics. This FairCom DB instance should never allow changes to data.

Ensure that the Pacemaker Red Hat high-availability add-on component is installed.

Ensure your user account is a part of the wheel group or consult with your local system administrator for appropriate access.

Notice

VMware is used later in this example only to provide a fencing agent. VMware is not required, and other fencing agents may be used.

Note

Unless otherwise mentioned, each of the steps in this procedure must be performed on all nodes in the cluster. Sudo access is required for the majority of the steps.

Configure and load required network ports for high-availability components using the following command.

sudo firewall-cmd --permanent --add-service=high-availability

sudo firewall-cmd --reload

Assign the hacluster user password using the following command.

Note

The high-availability installed packages create a default cluster user (hacluster) used to run and isolate its services. This default user is used to connect across nodes and requires a secure password.

sudo passwd hacluster

Enable and start the PCSD service on each node using the following command.

Note

systemctl enable automatically starts the service on system boot.

sudo systemctl enable pcsd.service

sudo systemctl start pcsd.service

Identical FairCom DB packages are installed into /opt/faircomdb on each node. This example assumes the system user "faircom" extracts the package and runs the server process, though any system user name could be used. This standardized location is appropriate for external software packages. Symlinks are created in /usr/local/bin/ for important utilities used by the Pacemaker resource scripts so that they are available in default paths. Firewalls must be configured to allow required database ports on all nodes.

Configure firewalls to allow required database ports on all nodes.

Note

Port 5597 is the FairCom DB default ISAM connection port (also known by the server name FAIRCOMS).

Port 6597 is the FairCom DB SQL connection port.

Port 8443 is the default web apps port.

sudo firewall-cmd --zone=public --permanent --add-port=5597/tcp

sudo firewall-cmd --zone=public --permanent --add-port=6597/tcp

sudo firewall-cmd --zone=public --permanent --add-port=8443/tcp

sudo firewall-cmd --reload

Create symlinks in /usr/local/bin using the following commands. See repadm utility, and ctsmon utility.

sudo ln -s /opt/faircomdb/config/failover.linux.pacemaker/getsyncstate /usr/local/bin/getsyncstate

sudo ln -s /opt/faircomdb/config/failover.linux.pacemaker/setsyncstate /usr/local/bin/setsyncstate

sudo ln -s /opt/faircomdb/tools/repadm /usr/local/bin/repadm

sudo ln -s /opt/faircomdb/tools/ctsmon /usr/local/bin/ctsmon

Ensure that permissions on the utilities are set correctly by using the following commands.

sudo chmod 755 /opt/faircomdb/config/failover.linux.pacemaker/getsyncstate

sudo chmod 755 /opt/faircomdb/config/failover.linux.pacemaker/setsyncstate

sudo chmod 755 /opt/faircomdb/tools/ctsmon

sudo chmod 755 /opt/faircomdb/tools/repadm
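After creating the symlinks and setting permissions, a quick check such as the following sketch can confirm that each utility resolves on the PATH and is executable. The helper name check_utils is hypothetical, and the utility names are the four from the steps above.

```shell
#!/bin/sh
# Sketch: confirm each utility resolves on PATH and is executable.
# check_utils is a hypothetical helper; it returns the number of missing utilities.
check_utils() {
    missing=0
    for util in "$@"; do
        if path=$(command -v "$util" 2>/dev/null) && [ -x "$path" ]; then
            echo "ok       $util -> $path"
        else
            echo "MISSING  $util"
            missing=$((missing + 1))
        fi
    done
    return "$missing"
}

check_utils getsyncstate setsyncstate repadm ctsmon || echo "some utilities are not available"
```

Running the check as the OS user that runs the server process is likely to be the most useful, because that is the account whose PATH and permissions matter.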

Ensure all files and components are owned and readable by the proper OS user.

Important

The user ID (faircom) under which the server process runs must be added to the haclient group so that it can run the crm_attribute utility to read and write the cluster attribute.

sudo usermod -a -G haclient faircom

Note

If not using the default user ID (faircom), you must specify the OS user for the user=owner_name parameter when running the pcs resource create faircomdb command. You will see references to this username in the FairCom DB resource scripts.
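Group membership can be confirmed with a short check like the one below. This is a sketch: user_in_group is a hypothetical helper built on the standard id utility, and the user and group names are the defaults used in this example.

```shell
#!/bin/sh
# Sketch: confirm that a user belongs to a group.
# user_in_group is a hypothetical helper; it succeeds only on an exact group-name match.
user_in_group() {
    id -nG "$1" 2>/dev/null | tr ' ' '\n' | grep -Fqx "$2"
}

if user_in_group faircom haclient; then
    echo "faircom is a member of haclient"
else
    echo "faircom is NOT a member of haclient (or the user does not exist)"
fi
```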

Required FairCom DB files

The files in this section are required to configure the server and its embedded replication agent to run in a Pacemaker cluster.

FairCom DB files in the server binary directory (/opt/faircomdb/server/):

server executable - faircom, ctsrvr (alternate legacy server binary file name), ctreesql (alternate legacy server binary file name)

server core library - libctreedbs.so

client library - libmtclient.so

agent libraries - librcesbasic.so, librcesdpctree.so

server license file - ctsrvrXxx.lic

Replication agent subdirectory (/opt/faircomdb/server/agent/):

replication agent library - libctagent.so

Configurations (/opt/faircomdb/config/):

server configuration file - ctsrvr.cfg

agent configuration; specifies name of replication agent configuration file, relative to server working directory - ctagent.json

Replication (/opt/faircomdb/config/failover.linux.pacemaker):

replication agent configuration file, used when this server is running as a secondary server - ctreplagent.cfg

application-specific file filter for replication - replSync.xml

settings files used by the replication agent - source_auth.set, target_auth.set

Required file configuration

Copy the /opt/faircomdb/config/failover.linux.pacemaker/ctagent.json file to /opt/faircomdb/config.

Edit the /opt/faircomdb/config/ctagent.json file to look like the following:

{ "managed": false, "configurationFileList": [ "../config/ctreplagent.cfg" ] }

Copy the /opt/faircomdb/config/failover.linux.pacemaker/ctreplagent.cfg file to /opt/faircomdb/config.

Note

This file will need to be modified for your environment (see ctAgent configuration for synchronous commit replication).

Copy /opt/faircomdb/config/failover.linux.pacemaker/replSync.xml to /opt/faircomdb/config.

ctAgent configuration for synchronous commit replication

It is important to configure replication on both nodes correctly. Once a failed node has recovered, it becomes the secondary node and the replication direction between the nodes is reversed. If replication is not correctly configured, the secondary node will not be in sync after recovery.

Consider a cluster with two nodes, node1 and node2. Replication pulls from the primary, node1, and applies to the secondary, node2. In this example, the replication agent configuration file (ctreplagent.cfg) for node2 is used for initial setup.

; c-tree Replication Agent Configuration File

;file filter must specify the names of files to include/exclude for replication and the purposes, including sync_commit

file_filter <../config/replSync.xml

;replication agent unique id; must be same on both systems

unique_id agent1

check_update yes

replicate_data_definitions yes

;enable synchronous commit processing for replication agent

syncagent yes

parallel_apply yes

num_analyzer_threads 1

num_apply_threads 4

sync_log_writes yes

; Source server connection info

source_authfile ../config/replication/source_auth.set

source_server FAIRCOMS@node1

source_nodeid 10.0.0.1

; Target server connection info

target_authfile ../config/replication/target_auth.set

target_server FAIRCOMS@localhost

target_nodeid 10.0.0.2

socket_timeout 5

lock_retry_count 10

lock_retry_sleep 1000

; Read 8 KB batches from source server

batch_size 8192

; Use a 1-second timeout when reading from source server

read_timeout_ms 1000

exception_mode transaction

; The master server will remember the replication agent's log position

; even when the replication agent disconnects.

remember_log_pos yes

; Log file name

log_file_name ../data/ctreplagent.log

source_server and nodeid on the second node

The only difference in the replication agent configuration file between node 1 and node 2 is that the source server name is changed to the other node name and the node IDs are set with the appropriate node ID for the servers.

; Source server connection info

source_server FAIRCOMS@node2

source_nodeid 10.0.0.2

; Target server connection info

target_server FAIRCOMS@localhost

target_nodeid 10.0.0.1
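Because the two files differ only in these lines, the node-specific part can be generated from three values. The sketch below assumes the server name FAIRCOMS and the example node IDs used in this section. The function name repl_node_settings is hypothetical.

```shell
#!/bin/sh
# Sketch: print the node-specific part of ctreplagent.cfg.
# Arguments: remote host name, remote node ID, local node ID (all illustrative).
repl_node_settings() {
    remote_host=$1
    remote_id=$2
    local_id=$3
    cat <<EOF
; Source server connection info
source_server FAIRCOMS@${remote_host}
source_nodeid ${remote_id}
; Target server connection info
target_server FAIRCOMS@localhost
target_nodeid ${local_id}
EOF
}

# On node2 (pulls from node1):
repl_node_settings node1 10.0.0.1 10.0.0.2
# On node1 (pulls from node2 once the roles are reversed):
repl_node_settings node2 10.0.0.2 10.0.0.1
```

The source always points at the other node and the target at the local server, so swapping the first two arguments with the third is all that changes between nodes.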

file_filter

The file_filter option is important because it determines which files are replicated by the replication agent and it enables synchronous commit mode for the files. In this example, two files, mark.dat and admin_test.dat, are replicated in synchronous commit mode. See replfilefilter.

<?xml version="1.0" encoding="us-ascii"?>

<replfilefilter version="1" persistent="y">

<file status="include">mark.dat</file>

<file status="include">admin_test.dat</file>

<purpose>create_file</purpose>

<purpose>read_log</purpose>

<purpose>open_file</purpose>

<purpose>sync_commit</purpose>

</replfilefilter>

The source_authfile and target_authfile options reference .set files that contain encoded authentication information for the replication agent. These files are provided for the default ADMIN user and need to be updated when the password is changed. See ctcmdset - Authentication File Encoding Utility.

FairCom DB server configuration

The server configuration file (ctsrvr.cfg) requires additional options specific to participating in a Pacemaker cluster.

;Required keywords for pacemaker-based replication

CHECK_CLUSTER_ROLE YES

REPL_NODEID 10.0.0.1

;Optional keywords related to pacemaker replication

SECONDARY_STARTUP_TIMEOUT_SEC 20

SYNC_COMMIT_TIMEOUT 10

MAX_REPL_LOGS 100

REPLICATE *.dat

REPL_NODEID <node_id>

This must be unique for each server. It is formatted like an IP address, but it does not need to be a valid IP address and has no relationship to one. This must match the target_nodeid specified in ctreplagent.cfg on this machine, and the source_nodeid from ctreplagent.cfg on the remote machine.

CHECK_CLUSTER_ROLE YES

This enables the server’s integration with Pacemaker. When the server starts it checks for the file cluster.ini in the server’s data directory. The start function in the Pacemaker script for faircomdb writes to cluster.ini to signal the server of its role as a primary server.

DEAD_CLIENT_INTERVAL <seconds>

The client OS sends a TCP termination signal when a connection is terminated or the client process crashes. Sometimes there are OS, hardware, or network-level issues that prevent this. The server OS cannot distinguish this condition from an idle connection and will continue to consume server resources for the terminated client. Beginning with V13.0.1, the DEAD_CLIENT_INTERVAL option configures a TCP probe on idle connections at this interval. If the client fails to acknowledge the probe, the connection will be dropped after approximately 10 additional seconds.

REPLICATE <filename>

This tells the server to attempt to enable the replication attribute on files matching <filename> that it opens. Multiple REPLICATE entries may be used to list files individually. If ctreplagent.cfg specifies a file_filter, REPLICATE may be redundant. Files with the replication attribute must still be included within a replication file filter used by a particular agent for changes to the file to be replicated by that agent when the agent uses a file_filter.

MAX_REPL_LOGS <max_logs>

Specifies a limit to the number of transaction logs that will be kept by a primary server for replication agents. When a secondary database is offline it is not receiving transaction log data, which must be persisted on the primary until the secondary can reconnect and automatically synchronize itself from these logs. This keyword serves as a safety check to prevent disk space from being exhausted on the primary due to a slow or offline replication agent. If the limit is hit, all secondary servers must be manually resynchronized from the primary. When configuring this, consider the available disk space, the typical size of transaction logs, and how quickly the application generally fills transaction logs.

SECONDARY_STARTUP_TIMEOUT_SEC <time_in_seconds>

This configuration option sets the time limit after which the server stops waiting for a notification of its role when starting up. If the server was a secondary server the last time it ran and within this time limit it does not receive a notification of its role from Pacemaker and cannot connect to the partner server, then it stops waiting and completes its startup acting as a secondary server in the cluster. This option defaults to 20 seconds.

SYNC_COMMIT_TIMEOUT <time_in_seconds>

Important

This configuration option sets a time limit in seconds for which a transaction commit waits for the transaction log data to be copied to the secondary system. If a transaction commit operation times out waiting for its log data to be copied to the secondary server, the server switches into asynchronous replication mode and the transaction commit proceeds. This option defaults to 60 seconds, which is probably much too large for most environments. The switch to asynchronous mode indicates that the secondary is out of sync with the primary, so this keyword controls a hard tradeoff between redundancy and performance since the application will encounter this timeout as a delay. Clusters with reliable and fast network connections between nodes should be able to set this to smaller values, but the size of transactions must be considered as well. This value must be smaller than any client socket timeouts set with ctSetCommProtocolOption(ctCOMMOPT_SOCKET_WAIT_INTERVAL) to avoid clients timing out if the secondary server is stopped. It must also be smaller than the faircomdb resource agent monitor timeout. Otherwise the primary appears to hang when the secondary server is stopped, which causes Pacemaker to demote and stop the primary.
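The ordering rule for SYNC_COMMIT_TIMEOUT can be checked before deployment with a small helper like the one below. This is a sketch: check_sync_timeout is a hypothetical function, all values are in seconds, and the 30-second client socket timeout in the example call is an assumed value.

```shell
#!/bin/sh
# Sketch: check that SYNC_COMMIT_TIMEOUT is below both related timeouts.
# All three values are in seconds; check_sync_timeout is a hypothetical helper.
check_sync_timeout() {
    sync_commit=$1      # SYNC_COMMIT_TIMEOUT from ctsrvr.cfg
    client_socket=$2    # smallest client socket timeout in use
    monitor=$3          # faircomdb resource agent monitor timeout
    status=0
    if [ "$sync_commit" -ge "$client_socket" ]; then
        echo "SYNC_COMMIT_TIMEOUT ($sync_commit) must be below the client socket timeout ($client_socket)"
        status=1
    fi
    if [ "$sync_commit" -ge "$monitor" ]; then
        echo "SYNC_COMMIT_TIMEOUT ($sync_commit) must be below the monitor timeout ($monitor)"
        status=1
    fi
    return $status
}

# Example: 10 s from the sample ctsrvr.cfg, a 30 s client timeout (assumed), a 20 s monitor timeout.
check_sync_timeout 10 30 20 && echo "timeouts are consistent"
```

With the 60-second default in place of 10, the same call would report both violations.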

Server scripts

The server sets a global cluster attribute named promotestate to indicate which node is the current primary server and whether it is running in synchronous or asynchronous replication mode.

The server runs the getsyncstate shell script to read the cluster attribute and the setsyncstate shell script to set its value.

Ensure that these scripts have been put into a directory that is included in the server’s PATH environment variable and is readable and executable by the user ID under which the server process is run.

The FairCom server runs setsyncstate automatically when configured to work under Pacemaker. When the primary server calls the setsyncstate script it sets its replication mode to synchronous or asynchronous using the following options:

“setsyncstate y” sets the server to synchronous mode

“setsyncstate n” sets the server to asynchronous mode

“setsyncstate c” checks to see if the script can be properly run

If the FairCom server sets the replication mode to asynchronous the cluster is at risk in case of failure.

To notify the administrator of changes to the replication mode, edit the setsyncstate script to use a specified notification method. The commented lines (#) in the script show where to insert notification methods. Use the echo command to send notifications to a file in the tmp directory. To specify another notification method, such as sending an email or running a Python script, use any command that can be run from a shell.

setsyncstate script:

#!/bin/bash

case $1 in

"y")

crm_attribute -G --name promotestate --update "{ \"node\":\"$(hostname)\", \"sync\":\"y\" }"

rc=$?

# You can add a notification that the server is entering synchronous mode here.

# For example:

# echo "`date` Node $(hostname) entered synchronous mode." >> /tmp/faircomdbSyncState.log

echo rc=$rc

exit $rc

;;

"n")

if [ "$2" == "" ]; then

crm_attribute -G --name promotestate --update "{ \"node\":\"$(hostname)\", \"sync\":\"n\" }"

else

crm_attribute -G --name promotestate --update "{ \"node\":\"$(hostname)\", \"sync\":\"n\", \"logposition\":\"$2\" }"

fi

rc=$?

# You can add a notification that the server is entering asynchronous mode here.

# For example:

# echo "`date` Node $(hostname) entered asynchronous mode." >> /tmp/faircomdbSyncState.log

echo rc=$rc

exit $rc

;;

"c")

# option c checks that the script can be executed

echo rc=0

exit 0

;;

*)

echo error: must specify y, n, or c

rc=1

echo rc=$rc

exit $rc

;;

esac
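The value written by setsyncstate is a small flat JSON-style string, so individual fields can be pulled out with standard tools. The sketch below shows one way to do that. promotestate_field is a hypothetical helper, not the shipped getsyncstate script. It assumes the simple format produced above, with no escaped quotes, and the logposition value is only a placeholder.

```shell
#!/bin/sh
# Sketch: pull one field out of a promotestate value such as
#   { "node":"node1", "sync":"y" }
# promotestate_field is a hypothetical helper, not the shipped getsyncstate script.
# It assumes the flat format written by setsyncstate (no escaped quotes).
promotestate_field() {
    value=$1
    field=$2
    printf '%s\n' "$value" | sed -n "s/.*\"$field\":\"\([^\"]*\)\".*/\1/p"
}

# Placeholder value for illustration only.
state='{ "node":"node1", "sync":"n", "logposition":"42" }'
promotestate_field "$state" node
promotestate_field "$state" sync
```

A field that is not present produces no output, which a calling script can test for with `[ -z "..." ]`.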

The procedures in this section describe how to add resources to the Pacemaker clustering monitor. The cluster monitors the following:

Node availability

FairCom DB server availability

Cluster IP availability

The pcs command controls and configures Corosync and Pacemaker through an interface to the Cluster Information Base (CIB) file. It provides an extensive command syntax for control over all aspects of your clustered environment. Help is available directly from this utility.

sudo pcs --help

This section provides the commands to run in order to define the cluster. In all of these commands, replace node1 and node2 with the host names of your configured machines.

Authenticate the local pcsd (the pcs daemon) to the pcsd services on the remote host nodes using the following command. When prompted, enter the default hacluster username and the password that was previously assigned, to authenticate Pacemaker against other nodes in the cluster.

Note

This only needs to be executed once on one of the nodes.

sudo pcs host auth node1 node2

Assign the nodes to the cluster and start it using the following command. From this point on, all pcs configuration changes are saved into the CIB as you make them.

sudo pcs cluster setup my_cluster --start node1 node2

Save a copy of the CIB to a file for analysis and comparison using the following command.

sudo pcs cluster cib mycluster.cib

Explore mycluster.cib, which is an XML file that pcs writes to the local folder.

Enable the cluster to start when a node is booted using the following command.

sudo pcs cluster enable --all

Allow Pacemaker to run with only 2 nodes.

sudo pcs property set no-quorum-policy=ignore

Set preference for no migrations during normal operations.

sudo pcs resource defaults update resource-stickiness=INFINITY

Fail over on the first error.

sudo pcs resource defaults update migration-threshold=1

Allow a failed resource to resume being the master after 60 seconds.

sudo pcs resource defaults update failure-timeout=60

Client applications connect to a cluster floating virtual IP (VIP) (for example, 10.0.1.100) that the cluster management directs to the active node. The VIP is managed by a resource agent called the Cluster Virtual IP Resource Agent.

A VIP is switched between nodes on a failover event detected by the cluster. It provides a seamless connection experience for client applications.

Define a cluster IP that is monitored for availability to provide a single IP address to the client application.

Note

During a failover event, the client’s connection will be broken. After a failover, a client simply reconnects to the same VIP. The client does not need to know the internal IP addresses of each server.

Modify the IP address in the following command to match your environment. Consult your network administrator for a suitable static IP address and netmask value.

sudo pcs resource create VirtualIP IPaddr2 ip=cluster.ip.address cidr_netmask=22

For Pacemaker to detect a process failure, it needs to run a heartbeat script periodically. This is a monitoring resource agent. FairCom provides a script that satisfies the basic operational elements required by Pacemaker. These include start, stop, monitor, and validate operations.

The name of the script is faircomdb (also known as the FairCom DB Resource Agent).

Navigate to the default scripts in /usr/lib/ocf/resource.d/heartbeat/.

Copy the faircomdb resource agent script into the /usr/lib/ocf/resource.d/heartbeat/ area.

Verify that this script has executable permissions and correct ownership.

ls -l /usr/lib/ocf/resource.d/heartbeat/faircomdb

The script should have permissions and ownership like the following:

-rwxr-xr-x. 1 root root 20982 May 30 2020 /usr/lib/ocf/resource.d/heartbeat/faircomdb

Note

Without correct permissions, Pacemaker will report that the resource could not be installed.

Repeat steps 1 through 3 on the second node.

Display available options and requirements by running the following command.

Note

Multiple configuration options can be provided to this resource agent directly from the command line. Some of these are required.

sudo pcs resource describe ocf:heartbeat:faircomdb

Create your faircomdb resource. Update the local working directory paths, the faircomdb process owner name, and the passwords to match your environment.

Note

These cluster management commands only need to be run on one node of the cluster. As the cluster is now active and enabled, all changes are synchronized across all nodes in the CIB database persisted in /var/lib/pacemaker/cib/cib.xml.

Be sure to change your FairCom DB ADMIN password from the default.

sudo pcs resource create faircomdb ocf:heartbeat:faircomdb faircomdb_servername=FAIRCOMS faircomdb_adminpass=ADMIN faircomdb_working_directory=/opt/faircomdb/server faircomdb_data_directory=/opt/faircomdb/data user=SOMEUSER group=SOMEGROUP

Define the FairCom DB Resource Agent (that runs on both nodes simultaneously) as a cloned primary-secondary (master-clone) resource using the following command.

Note

This command also tells the cluster to have only one primary server on the cluster.

sudo pcs resource promotable faircomdb master-max=1 master-node-max=1 clone-max=2 clone-node-max=1 notify=true

Enable monitoring for the primary database, checking whether it is alive at the specified interval (in seconds).

sudo pcs resource op add faircomdb monitor timeout=20 interval=11 role=Master

Add a colocation constraint to ensure that the VIP starts on the primary node (master) when the cluster is started.

Add an INFINITY score to force the colocation.

sudo pcs constraint colocation add VirtualIP with master faircomdb-clone score=INFINITY

With constraints in place, you can define the order in which resources are started. The FairCom DB server resource should be started first, followed by the VIP. Start the VIP when the FairCom DB server starts or is promoted on the primary node using the following command.

sudo pcs constraint order start faircomdb-clone then start VirtualIP

sudo pcs constraint order promote faircomdb-clone then start VirtualIP

The cluster calls a fencing agent to kill resources and prevent them from running accidentally. In a FairCom DB cluster, the fencing agent ensures that a failed primary node can no longer receive database connections. After a failover, only the secondary server is allowed to modify data. Failure to do this can result in a split-brain scenario, which is very difficult to recover from. Fencing usually involves external disruptions to resources, such as using power supplies to shut down a server, using a network switch to disable network access, shutting down a VM, and so forth. Fencing agents can be found in /usr/sbin/fence_*. For details, see Red Hat Training Chapter 10.

In this example environment, VMware virtual machines (VMs) are used for the hosts. The VMware fencing agent is used to kill an unresponsive node during a failover, a technique known as Shoot The Other Node In The Head (STONITH). It prevents conflicts with mutually exclusive resources.

Fencing should initially be disabled to speed cluster setup, configuration, and testing. It has been observed that the cluster might not start resources when fencing is enabled, which is the default. In production environments, fencing must be configured and enabled to ensure data integrity. For failure modes involving non-responsive nodes (such as network failures, hanging processes, and so forth), fencing is the only method to resolve the problem in a well-defined way. However, something as simple as stopping Pacemaker on one node (pcs cluster stop) can make this node non-responsive and trigger its fencing.

This command enables or disables any configured STONITH agent.

sudo pcs property set stonith-enabled=[ false | true ]

In this command, update the host map, IP address, login, and password to match your environment. The host map names should match the node names that pcs status lists for your existing nodes. If you specify the VMware host by name rather than by IP address, the name must be resolvable through your local DNS.

Caution

Exercise caution if accessing your VMware host using the system root account. It may be preferable to configure a specific administrative account for this purpose. Consult with your local system administrator for more information.

sudo pcs stonith create vmfence fence_vmware_soap pcmk_host_map="cluster1.company.com:CLUSTER-NODE-1; cluster2.company.com:CLUSTER-NODE-2" ipaddr=vmware-host.company.com ssl_insecure=1 login=root passwd=xxxxxx pcmk_monitor_timeout=120s

You may use any fencing components that best fit your environment.

Power supply (UPS specific)

Network devices (switches, routers or NICs)

Server Remote controlled shutdown (Dell iDRAC8, HP iLO, and so forth)

Configure your cluster to start at boot time such that all nodes are available once the boot process is finished.

systemd services for pacemaker and corosync

sudo systemctl enable corosync.service

sudo systemctl enable pcsd.service

You can shut down an entire cluster, or just individual nodes. This requires extra attention. Failure to follow the proper sequence will result in the primary node failing over to the secondary node or causing the primary node to restart immediately after you shut it down.

Important

It is the job of the cluster software to keep the nodes in the cluster running at all times, so you must use pcs to shut down resources within the cluster, or the cluster itself.

Shut down a single node (node1), preventing all resources from running on that node. If node1 is currently the master, failover occurs and resources (such as faircomdb and VirtualIP) are migrated to node2.

sudo pcs node standby node1

Shut down the entire cluster on any node to stop Pacemaker and the messaging layer using the following command.

sudo pcs cluster stop --all

Force the cluster services to stop in the event that they do not stop running properly on a host using the following command.

sudo pcs cluster kill

Completely remove the cluster environment configuration from all nodes using the following command. The cluster must be created.

sudo pcs cluster destroy --all

Tune the main pacemaker configuration timeouts for various resource agent operations. Load testing and consideration of potential delays caused by expensive operations such as database or system backups can help determine a reasonable set of timeout values for your system.

Important

It is generally better to set a timeout too large than too small. If the timeout is too small, a delay caused by high system load makes the relevant operation time out, which Pacemaker treats as a resource failure. This leads to unnecessary resource migration (failover) and possible fencing of the failed node. When the timeout is much too small, it can lead to repeated failures as each node successively times out.

A large timeout means that some types of failures (such as a database process hang, network failure, or node failure) take the full timeout interval to detect, which increases the total time a resource is unavailable during a failover event.

sudo pcs resource update faircomdb op monitor timeout=120s

sudo pcs resource update faircomdb op start timeout=120s

faircomdb op start timeout

Consider both normal startup times and recovery startup times. If typical recovery times are very large, such a startup may need to be done manually outside the cluster.

faircomdb op stop timeout

Consider the database shutdown time. If the stop operation times out, Pacemaker will fence the node if fencing is configured. To avoid this, the resource agent will attempt to kill (SIGKILL) the server process shortly before the timeout expires.

faircomdb op promote timeout

A promote occurs at failover. This timeout must account for the replication lag at the time of failover. The replication lag is the time needed to apply the replication changes that are queued, pending apply, on the secondary server being promoted.

Example 8. Log read lag

An estimate of this lag can be monitored with the following command.

repadm -c getstate -s SECONDARY_NAME

Output

time shows the logread lag in seconds.

source server: FAIRCOMS@rhel8cn3

target server: FAIRCOMS@localhost

--logship--------------------------------------------

sid lognum logpos state seqno error func

23 2 108032586 source 675 0 ctReplReadLogData

--logread--------------------------------------------

sid tid lognum logpos state seqno time error func

24 41 2 108032586 source 120284 0 0 ctReplGetNextChange

--analyzer--------------------------------------------

# state seqno error func

1 source 9339 0 ctThrdQueueRead

--dependency------------------------------------------

state seqno error func

source 1547757 0 ctThrdQueueRead

--apply-----------------------------------------------

# tid lognum logpos state seqno files error func

1 31 2 97494282 source 12015 0 0 ctThrdQueueRead

2 36 2 97499497 source 10600 0 0 ctThrdQueueRead

3 37 2 97507840 source 11218 0 0 ctThrdQueueRead

4 38 2 97477128 source 10507 0 0 ctThrdQueueRead

--tranStat-- --analysisQ- ---dependQ-- ---dependG-- ---readyQ---

0( 0) 0( 0) 0( 0) 0( 0) 0( 0)

faircomdb op monitor timeout

The monitor operation occurs frequently and involves a new database connection. Database operations such as online backups or Quiesce may impose an abnormal delay on new connections. The monitor timeout should allow for these exceptional connection times; otherwise, a failover event will be triggered.

Important

This must be larger than the server's SYNC_COMMIT_TIMEOUT <time_in_seconds> configured in ctsrvr.cfg, or Pacemaker will think the primary has failed any time the secondary server is stopped.

faircomdb op monitor interval

The monitor interval determines how frequently database liveness is tested by making a new database connection. If the monitor operation fails or times out, a failover will be triggered. A smaller interval will detect failures more quickly but imposes more overhead on the database.
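The promote timeout guidance above depends on the log read lag reported by repadm. As a rough sketch, the time column of the --logread section could be extracted with awk. This assumes that getstate prints each section header and each row on its own line, as in Example 8; the function name logread_lag is hypothetical and is not part of the FairCom tools.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not a FairCom utility): print the "time" value
# (lag in seconds) from the --logread section of `repadm -c getstate`
# output supplied on stdin. Assumes the line-oriented layout of Example 8.
logread_lag() {
  awk '
    /^[[:space:]]*--logread/ { in_section = 1; col = 0; next }
    /^[[:space:]]*--/        { in_section = 0 }
    in_section && col == 0 {
      # Header row: find which column is labeled "time".
      for (i = 1; i <= NF; i++) if ($i == "time") col = i
      next
    }
    # First data row after the header: print the lag and stop.
    in_section && NF >= col { print $col; exit }
  '
}
```

It might be used as `repadm -c getstate -s SECONDARY_NAME | logread_lag`, for example to sample the lag under load before choosing a promote timeout.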

This procedure describes how to activate clustering.

Start the cluster using the following command.

Note

This command starts all cluster-associated resources on all nodes and begins the monitoring processes as defined in your resource agent scripts. As replication was configured directly in FairCom DB, it is immediately active on startup.

sudo pcs cluster start --all

Check the cluster status using the following command.

sudo pcs status

Additional FairCom DB resource logging can be enabled by setting clusterdebug=1 in the faircomdb resource agent script.

Check /opt/faircomdb/server/server.log and /var/log/messages to further verify that all cluster database components have successfully started.

When you have determined that the cluster has successfully started, check that replication is active between database nodes.

Ensure that the cluster node hostname references the specific node where replication is active (the slave node) to ensure the correct active replication node is viewed.

Note

Example 9, “Query the replication agent's connection state”, below assumes FAIRCOMS is your configured FairCom DB server name.

From pcs status, verify which node is the current slave node and substitute that node name.

Example 9. Query the replication agent's connection state

This example shows the command to examine the replication agent's connection state.

repadm -c getstate -u ADMIN -p ADMIN -s FAIRCOMS@node2

Output

s t lognum logpos state seqno time func

n n 0 0 target 2 - INTISAM

n n 0 0 source 3 - INTISAM

n n 0 0 source 3 - INTISAM

It may be necessary to resync the secondary server from the data on the primary. If a dynamic dump is used, the primary may remain operational while the resync occurs.

Putting the secondary node into standby mode stops the FairCom database and allows a manual resync to occur.

sudo pcs node standby node2

Use dynamic dump to make a point-in-time backup of the primary. This command should be run on the secondary node, and will back up files from the primary node (node1) to the local file /faircomdb/data/resync.bak. See the FairCom Database Backup Guide for details on constructing a backup script.

/faircomdb/tools/ctdump -t <backup script name> -c -o /faircomdb/data/resync.bak

Use the dump restore utility to restore the backup to its point-in-time state. The restore script may be identical to the backup script in many cases. The !DUMP option must specify the /faircomdb/data/resync.bak file.

/faircomdb/tools/ctrdmp <restore script name>

Rename the ctreplagent.ini file. The ctrdmp utility generates a ctreplagent.ini file that identifies the log position on the source (primary) server that corresponds to the point in time of the restored files. This tells the replication agent where in the log to begin replication. At startup, the agent looks for a file named /faircomdb/data/ctreplagent_<unique id>.ini, where <unique id> matches the unique_id value from ctreplagent.cfg converted to uppercase.

mv ctreplagent.ini /faircomdb/data/ctreplagent_AGENT1.ini

Restart resources on the secondary node.

sudo pcs node unstandby node2

Once the database and replication agent restart, if the replication agent locates the ctreplagent_AGENT1.ini file, it should delete this file and log a message to ctreplagent.log similar to the following:

"AGENT1: Logread: INFO: Initial log read position: overriding saved position on target server: using specified position (set in ini file)"

The secondary should now match the primary server data as of the time of the backup, and the replication agent is applying changes that have occurred since then.
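The resync steps above can be strung together in a small wrapper. The following is only a sketch under stated assumptions: the function names run and resync_secondary, the argument order, and the DRY_RUN switch are all hypothetical, and the commands themselves are the ones listed in the procedure. By default it only prints what it would execute.

```shell
#!/usr/bin/env bash
# Sketch of the manual resync procedure, intended to run on the secondary node.
# DRY_RUN=1 (the default here) prints each command instead of executing it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Usage: resync_secondary NODE BACKUP_SCRIPT RESTORE_SCRIPT UNIQUE_ID
# (hypothetical interface; UNIQUE_ID is the unique_id from ctreplagent.cfg)
resync_secondary() {
  local node=$1 backup_script=$2 restore_script=$3 unique_id=$4
  local agent_id
  # The agent looks for ctreplagent_<unique id>.ini with the id in uppercase.
  agent_id=$(printf '%s' "$unique_id" | tr '[:lower:]' '[:upper:]')

  run sudo pcs node standby "$node" || return 1
  run /faircomdb/tools/ctdump -t "$backup_script" -c -o /faircomdb/data/resync.bak || return 1
  run /faircomdb/tools/ctrdmp "$restore_script" || return 1
  run mv ctreplagent.ini "/faircomdb/data/ctreplagent_${agent_id}.ini" || return 1
  run sudo pcs node unstandby "$node"
}
```

Calling `resync_secondary node2 backup.txt restore.txt agent1` prints the five commands in order. Note that the sketch does not wait for the database to finish stopping after the standby step, and it assumes ctrdmp writes ctreplagent.ini to the current directory; both points should be checked before running with DRY_RUN=0.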

If a database error occurs on the secondary server during replication, one record for each operation in the failed transaction is logged to the agent's exception log. The agent will continue running and applying changes, but the secondary server is now out of sync with the primary and will refuse to be promoted to the role of primary server. These exceptions can be viewed with the getlog command.

These past exceptions, if left unresolved, tend to cause additional cascades of errors as those same records are modified on the primary server, leading to large data differences over time.

repadm -c getlog -u ADMIN -p ADMIN -s FAIRCOMS@node2

Once exceptions are resolved on the secondary, the exception records should be deleted from the agent’s exception log (see Example 11, “Purge the exception log”). Only when the exception log has no entries will the secondary server be allowed to promote to the primary server.

This might be valid and useful after a full resync of the secondary data, or if the exceptions were related to files being unintentionally replicated.

Caution

Purging exception log entries without resolving all data differences will lead to the loss of data.

repadm -c purgelog -u ADMIN -p ADMIN -s FAIRCOMS@node2

If a node in a properly operating FairCom DB cluster fails, the remaining node stays available as the primary server in a degraded cluster. As transactions continue to flow, the failed server falls out of sync. If the node is restored to service (now as the secondary), it resumes asynchronous replication from the point where it failed (see MAX_REPL_LOGS for the limit). Once it has copied the primary's log data, it automatically re-enters synchronous commit mode and restores the cluster to a normal state.

During this degraded interval, if the single remaining node also fails, the secondary cannot be promoted without some data loss, as it is effectively a backup from an earlier point in time. Normally, the secondary server is expected to remain partially available (read-only) until the primary is restored. In the event of a catastrophic disk failure on the primary, forcing such a promotion may be the best available course of action (see Example 12, “Force a promotion”).

Run the following command on the secondary server you intend to promote:

/usr/local/bin/setsyncstate y

Once your cluster is configured, operational, and ready to service FairCom DB client applications, FairCom DB client applications can connect to the single cluster-assigned virtual IP address without regard to which node may be servicing them.

Ultimately, FairCom DB client applications will need to respond to failover events. ISAM-based applications maintain a tight affinity with their connected server. As such, there is a rich array of context data that can be lost on database failover. Unfortunately, with this database model, this is unavoidable in a shared-nothing architecture such as an OCF cluster.

This example shows multiple failed connection states that can be tested when a client connection is lost. All of these states should be monitored and checked for validity before assuming a database node failover occurred. It is assumed an application must reconnect to the database cluster at this point. Applications must re-initialize any current database activities and context they expected at the time of the last operations as no expectation of the last operational state can be made.

ARQS_ERR 127 "could not send request"

ARSP_ERR 128 "could not receive response"

ASKY_ERR 133 "server not available"

SHUT_ERR 150 "server is shutting down"

TRQS_ERR 808 "request timed out"

TRSP_ERR 809 "response timed out"

One obvious indicator that a cluster has failed over is a hanging socket. When the underlying cluster node IP address is switched from the primary node to the secondary node, the existing TCP/IP socket connection becomes invalid and the application receives a network error. When blocking sockets are in use (the default behavior), this can lead to long delays, because many systems use an OS default of 2 hours before detecting a broken socket.

Beginning with V13.0.1, the method to detect this situation is to use TCP-level keepalives to detect non-responsive TCP links. This may be enabled on the client side by calling ctSetCommProtocolOption(ctCOMMOPT_TCP_KEEPALIVE_INTERVAL,""), which causes probes to be sent after the connection is idle for the configured interval (in seconds). If the server fails to respond, the TCP connection is abandoned after about 10 additional seconds and a FairCom DB network error (typically ARQS_ERR or ARSP_ERR) is returned to the application.

If the server is also using TCP keepalives to detect broken links (ctsrvr.cfg option DEAD_CLIENT_INTERVAL), the client interval should be slightly different from the server interval to eliminate duplicate keepalive probes on idle connections. The former method used a socket timeout with a maximum time to wait before returning a timeout error. However, the socket timeout will also fail a normal API call that takes a long time, such as the rebuild of a very large index, so the timeout may need to be adjusted for some special cases.

FairCom DB allows configuring a socket communication option with ctCOMMOPT_SOCKET_TIMEOUT which sets the global socket timeout value (in seconds).

When ctCOMMOPT_SOCKET_TIMEOUT is set, network socket requests and responses return TRQS_ERR 808 or TRSP_ERR 809 when the specified time has expired while waiting.

ctSetCommProtocolOption(ctCOMMOPT_SOCKET_WAIT_INTERVAL, socketWaitInterval);

When a timeout condition is met, the client can simply reconnect to the same virtual IP address it used initially; the cluster will now redirect that connection to the alternate node. This does mean that your prior client database state is lost. For example, if you were traversing an index in a search loop, your position context is lost at this point and you will need to restart your search operation.

Important

Client socket timeouts must be configured to be larger than the server's SYNC_COMMIT_TIMEOUT <time_in_seconds> configured in ctsrvr.cfg, or errors occur any time the secondary server is stopped.
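Because both the Pacemaker monitor timeout and the client socket timeout must exceed SYNC_COMMIT_TIMEOUT, it can help to check a proposed value against the server configuration before applying it. The sketch below assumes that ctsrvr.cfg holds the keyword and its value on one whitespace-separated line; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: compare a proposed timeout (in seconds) with the
# SYNC_COMMIT_TIMEOUT value found in a ctsrvr.cfg-style file.
# Assumes "KEYWORD value" lines; the last matching line wins.
sync_commit_timeout() {
  awk 'toupper($1) == "SYNC_COMMIT_TIMEOUT" { v = $2 }
       END { if (v != "") print v }' "$1"
}

# Returns 0 if TIMEOUT is larger than SYNC_COMMIT_TIMEOUT, 1 if it is not,
# and 2 if the keyword is missing from the file.
timeout_exceeds_sync_commit() {
  local cfg=$1 timeout=$2 sync
  sync=$(sync_commit_timeout "$cfg")
  if [ -z "$sync" ]; then
    echo "SYNC_COMMIT_TIMEOUT not found in $cfg" >&2
    return 2
  fi
  [ "$timeout" -gt "$sync" ]
}
```

For example, `timeout_exceeds_sync_commit /opt/faircomdb/server/ctsrvr.cfg 120` succeeds only when 120 seconds is larger than the configured value.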

To confirm that an application has actually connected to a new node in the cluster, it can check the replication node ID assigned to that node. For example, when using fencing agents, it is not expected that the original node remains active. It could be undefined behavior should the application connect back to a failed node.

When configured as recommended in the above sections, each node maintains a unique replication identification which is a reliable indicator. Use GetSymbolicNames() with ctNODEID as the mode parameter. Compare the current ID after connection with a prior ID and you will immediately know if your cluster has indeed failed over and your application successfully reconnected to the alternate node.

Some FairCom DB client applications could take advantage of new functions that alert an application when a failover event occurs. This is important because when a failover occurs, the VIP remains unchanged but the cluster switches the internal IP address from the primary to the secondary server. Applications connected to the primary server at the time of the failover may hang because their connection with the primary database server is no longer valid.

FairCom DB provides client applications with an option to enable a background thread that listens for a failover alert. The alert comes in the form of a User Datagram Protocol (UDP) communication, which, like TCP, is supported by all network switches. FairCom DB provides the failover.sh script to send UDP messages to database clients. In the Configuring Alerts section, this script is registered with the cluster so that it runs when the cluster starts a failover event.

The failover alert is protected with Transport Layer Security (TLS) to prevent a malicious user from being able to send false failover alerts, which would result in a denial of service attack.

The background thread that listens to alerts is generic and can be used for more than failover detection — for example, external events can trigger alternate client behaviors.

The UDP listening port is currently hardcoded to port 5595.

| Function | Description |
|---|---|
| | enables the background alert thread |
| | returns true when a failover alert has been received |
| | resets the failover state to false |
| | can now set the global socket timeout |
| | retrieves the hostname and IP address of the database server to verify if it is connected to a primary or secondary server |

Enable background failover detection

This function in the FairCom DB API enables background broadcast detection.

Note

ctSetClientLibraryOption() returns SERVER_FAILOVER_ERR (1159) on error.

NINT ctDECL ctSetClientLibraryOption(NINT option, pVOID value);

| Options | Description | Limits |
|---|---|---|
| | enables and disables broadcast read thread | |
| | enables and disables broadcast read thread debug mode | |

ctCLIOPT_BROADCAST_READ

ctSetClientLibraryOption(ctCLIOPT_BROADCAST_READ, "YES");

Check current failover flag

This API call checks your current failover state.

TEXT ctDECL ctGetFailOverState();

ctGetFailOverState() returns 1 if the global variable failoverstate is set.

if (ctGetFailOverState() == 1)

Reset failover state

This API call can reset failoverstate upon successful failover.

ctResetFailOverState()

/var/log/messages

This log holds details about cluster operations and errors encountered by resource agents.

/opt/faircomdb/data/CTSTATUS.FCS

This log holds details about FairCom DB errors.

/opt/faircomdb/data/ctreplagent.log

This log contains Replication Agent-specific errors and is configured in ctreplagent.cfg.

Describe resource

sudo pcs resource describe ocf:heartbeat:faircomdb

Output

ocf:heartbeat:faircomdb - FairCo DB resource agent

Resource agent script for FairComDB Database Server

Resource options:

binary: Full path to the FairComDB binary

config: Full path to a FairComDB configuration directory or configuration file

pid: File to read running process PID

user: User name or id FairComDB will run under

group: Group name or id FairComDB will run under

faircomdb_adminpass (required): FairComDB ADMIN password

faircomdb_monitor_user: FairComDB user name to connect for monitoring

faircomdb_monitor_userpass: FairComDB password to connect for monitoring

faircomdb_servername (required): FairComDB server name to connect for

monitoringi

faircomdb_host: FairComDB host name to connect for monitoring

faircomdb_working_directory (required): FairComDB server working directory.

This must be writable for FairComDB to

start and operate

faircomdb_data_directory (required): FairComDB server data directory. This

must be writable for FairComDB to start

and operate

faircomdb_heartbeat_tcpip: FairComDB heartbeat option that forces TCPIP

connections when enabled. Setting false will use a shared

memory heartbeat that will fallback to TCPIP

ctsmon: Path to FairComDB ctsmon monitor utility. Assume located in $PATH if

not provided

repadm: Path to FairComDB Replication Agent Administrator utility. Assume

located in $PATH if not provided

Default operations:

start: interval=0s timeout=30s

stop: interval=0s timeout=30s

monitor: interval=10s timeout=20s

notify: interval=0s timeout=30s

promote: interval=0s timeout=20s

demote: interval=0s timeout=20s

This script completely removes ALL existing cluster configurations and component definitions and creates a fresh cluster configuration from scratch.

Delete and recreate new cluster definitions and remove all old definitions

pcs cluster destroy --all

Define machines in the cluster

pcs cluster setup faircom cluster1.company.com cluster2.company.com

Start the cluster

pcs cluster start --all

Create a config file

pcs cluster cib clcfg

Set up a FairCom DB resource

Options should be configured for your specific system.

pcs -f clcfg resource create faircomdb ocf:heartbeat:faircomdb binary=/opt/faircomdb/server/faircom config=/opt/faircomdb/server/ctsrvr.cfg ctsmon=/opt/faircomdb/tools/ctsmon ctstop=/opt/faircomdb/tools/ctstop faircomdb_adminpass=ADMIN faircomdb_servername=FAIRCOMS faircomdb_working_directory=/opt/faircomdb/server faircomdb_data_directory=/opt/faircomdb/data user=fctech repadm=/opt/faircomdb/tools/repadm pid=/opt/faircomdb/server/faircomdb.pid

faircomdb uses primary/secondary

pcs -f clcfg resource promotable faircomdb master-max=1 master-node-max=1 clone-max=2 clone-node-max=1 notify=true

Add a monitor for the primary server

pcs -f clcfg resource op add faircomdb monitor timeout=20 interval=11 role=Master

Set timeouts

pcs -f clcfg resource update faircomdb op promote timeout=120s

pcs -f clcfg resource update faircomdb op start timeout=120s

pcs -f clcfg resource update faircomdb op stop timeout=120s

Create a Virtual IP (VIP) to connect your application to

pcs -f clcfg resource create VIP ocf:heartbeat:IPaddr2 ip=YOUR_IP cidr_netmask=22 op monitor interval=30

The VIP moves with the master server

pcs -f clcfg constraint colocation add VIP with master faircomdb-clone score=INFINITY

Start the master before the VIP

pcs -f clcfg constraint order faircomdb-clone then start VIP

System specific fencing

This must be customized for each system.

pcs -f clcfg stonith create vmfence fence_vmware_soap pcmk_host_map="cluster1.company.com:RHEL8-Node3;cluster2.company.com:RHEL8-Node4" ipaddr=vmware-host.company.com ssl_insecure=1 login=root passwd=mysecretpassword

pcs -f clcfg property set stonith-enabled=true

No quorum for only two nodes

pcs -f clcfg property set no-quorum-policy=ignore

Do not failover during normal operations

pcs -f clcfg resource defaults update resource-stickiness=INFINITY

Failover on first error

pcs -f clcfg resource defaults update migration-threshold=1

Allow failed resource to resume being master after 60 seconds

pcs -f clcfg resource defaults update failure-timeout=60

Apply above changes

pcs cluster cib-push clcfg

Cluster labs

Fencing configuration. See Red Hat documentation Chapter 10 - Configuring fencing.

Location of heartbeat scripts

/usr/lib/ocf/lib/heartbeat/ocf-shellfuncs

/etc/ha.d/shellfuncs

Corosync configuration

/etc/corosync/corosync.conf

In FairCom's testing environment, Corosync is configured for a very basic two node cluster. You do not need to create or otherwise edit corosync.conf. This is only provided for completeness. pcs cluster setup will create this file with all needed attributes and all other edits to this file should be made through the pcs utility.

cluster_name

Note

A cluster name is needed, and each node participating in the cluster will need to be defined.

ringX_addr name

Note

A fully qualified domain name is preferred for the node and, when specified, uniquely identifies the node and is used in later configuration commands.

two_node

Note

A two-node cluster requires the quorum two_node option to be enabled. This treats two-node clusters as if only one node is required for quorum. Consult the corosync.conf man page for details on available configurations.

Example

Pacemaker provides examples in /etc/corosync/corosync.conf.

totem {

version: 2

cluster_name: my_cluster

transport: knet

crypto_cipher: aes256

crypto_hash: sha256

}

nodelist {

node {

ring0_addr: node1.company.com

name: node1.company.com

nodeid: 1

}

node {

ring0_addr: node2.company.com

name: node2.company.com

nodeid: 2

}

}

quorum {

provider: corosync_votequorum

two_node: 1

}

logging {

to_logfile: yes

logfile: /var/log/cluster/corosync.log

to_syslog: yes

timestamp: on

}

To minimize disruption to users, database clients need to be notified quickly when the primary database server fails over to the secondary. This makes it easy for database clients to know the difference between connection failure caused by a network glitch or a database failover. When the database client knows a failover has occurred, it can simply reconnect to the VIP, which points to the secondary node after a failover.

FairCom provides an optional script, failover.sh, that uses a UDP datagram broadcast to alert all database clients of a failover event. This is fired from a predefined script which is configured as an alert resource.

The cluster defines alert resources that are fired during cluster events, such as resource logging, notifications, and maintenance tasks. Alert resources are scripts that are registered with pcs to be run when a cluster event occurs.

Run FairCom's failover.sh script when the failover event occurs using the following command.

# pcs alert create id=failover path=/usr/local/bin/failover.sh description="Script to be run at failover"

Example

Example scripts provided by Pacemaker can be found in /usr/share/pacemaker/alerts.

pcs alert create id=failover path=/usr/local/bin/failover.sh path=/var/lib/pacemaker/failover.sh description="Script to run at failover"

./failover.sh

#!/bin/bash

##USEFUL DEBUG##

#printf "$CRM_alert_node $CRM_alert_rsc $CRM_alert_task $CRM_alert_kind $CRM_alert_desc $CRM_alert_attribute_name $CRM_alert_attribute_value" | ncat -u 255.255.255.255 5595

#printf "FairCom FailOver Event" | ncat -u 255.255.255.255 5595

##END OF USEFUL DEBUG##

if [[ $CRM_alert_rsc == "VIP" && $CRM_alert_task == "monitor" && $CRM_alert_desc == "Cancelled" ]]; then

printf "FairCom FailOver Event" | ncat -u 255.255.255.255 5595

fi

if [[ $CRM_alert_task == "node" && $CRM_alert_kind == "lost" ]]; then

printf "FairCom FailOver Event" | ncat -u 255.255.255.255 5595

fi
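When adapting failover.sh, it may be convenient to separate the decision (is this alert a failover?) from the action (send the datagram), so the decision can be exercised without touching the network. The sketch below copies the two conditions from the script unchanged; the function name is_failover_event is hypothetical, and the CRM_alert_* values are the environment variables that Pacemaker passes to alert scripts.

```shell
#!/usr/bin/env bash
# Sketch: the two tests from failover.sh factored into a function.
# Returns 0 when the current CRM_alert_* environment matches either test.
# The [[ ]] syntax requires bash.
is_failover_event() {
  # VIP monitor operation was cancelled (the VIP is moving).
  if [[ ${CRM_alert_rsc:-} == "VIP" && ${CRM_alert_task:-} == "monitor" \
        && ${CRM_alert_desc:-} == "Cancelled" ]]; then
    return 0
  fi
  # Node-lost condition, written exactly as in the original script.
  if [[ ${CRM_alert_task:-} == "node" && ${CRM_alert_kind:-} == "lost" ]]; then
    return 0
  fi
  return 1
}
```

The script body could then be reduced to `is_failover_event && printf "FairCom FailOver Event" | ncat -u 255.255.255.255 5595`, and the conditions can be tried by setting the CRM_alert_* variables by hand in a shell.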

Use ctdump to copy the replicated files without stopping the ctmtap client. Enable the !REPLICATE keyword for the data files so that the restore action creates a ctreplagent.ini file, which lets you set a new starting point for the replication agent.

./ctdump -t mark.dmp -c -s FAIRCOMS@vcluster84 -u admin -p ADMIN -o mark.bck

This command produces a dynamic dump (backup) to be received on the client side. Please consider the dump script:

!DUMP mark.bck

!FILES mark.idx

!REPLICATE mark.dat

!END

Next, restore mark.bck using ctrdmp. Run this in a separate directory, since ctrdmp will produce some unused files.

./ctrdmp mark.dmp

Note

Make sure the restore script, which is slightly different from the backup script, complies with the server PAGE_SIZE setting.

Now, copy the data and index files to the destination server data directory.

mv mark.dat /opt/faircomdb/data

mv mark.idx /opt/faircomdb/data

To set the starting point of the replication agent, you need to copy the produced ctreplagent.ini file to the server directory. Please note that the file needs to be renamed to match the replication agent name. If you are using the Pacemaker sample configuration, the file will be named ctreplagent_AGENT1.ini.

mv ctreplagent.ini /opt/faircomdb/server/ctreplagent_AGENT1.ini

Next, clear all the replication logs from the server.

rm /opt/faircomdb/data/REPL*.FCS

Finally, restart the secondary server.

pcs cluster start

At this point, the secondary server is capable of restarting. Once the server is correctly started in the cluster, you may optionally delete ctreplagent_AGENT1.ini.

rm /opt/faircomdb/server/ctreplagent_AGENT1.ini

Server role file

When the server sets its role to the primary or secondary server after receiving notification of its role from Pacemaker, it writes its current role to the file serverrole.ini in the server’s data directory (which is set using the LOCAL_DIRECTORY server configuration option). This file contains the value 1 for the primary server and 2 for the secondary server. The server uses this file when it starts up to know its role the previous time it ran.
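For troubleshooting, the role file can be read from a shell. This is a minimal sketch that assumes serverrole.ini contains only the role value (1 or 2), possibly followed by whitespace; the function name server_role is hypothetical, and the actual file layout should be confirmed on your system.

```shell
#!/usr/bin/env bash
# Hypothetical helper: translate the contents of serverrole.ini into a name.
# Assumes the file holds just "1" (primary) or "2" (secondary).
server_role() {
  local file=$1 value
  # A missing or unreadable file means the role is not known.
  [ -r "$file" ] || { echo "unknown"; return 1; }
  value=$(tr -d '[:space:]' < "$file")
  case "$value" in
    1) echo "primary" ;;
    2) echo "secondary" ;;
    *) echo "unknown"; return 1 ;;
  esac
}
```

With the data directory used elsewhere in this document, a call might look like `server_role /opt/faircomdb/data/serverrole.ini`.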