FairCom RTG data is persisted in its “native” (COBOL) format. To share data between COBOL and SQL, the SQL engine performs necessary data conversion between COBOL and SQL data types on-the-fly as fields are accessed. As SQL access is fully read and write, this process must go in both directions. That is, on SQL SELECT native COBOL data must be converted to standard SQL data types for presentation and on SQL INSERT, SQL data types must be converted to persisted data accessible by an existing COBOL application. The XDD schema definition is the source of information about operations performed when bridging SQL and COBOL data.

There are two sides of conversion:

Each is described below.

When converting data from COBOL to SQL there may be situations where the content of the record cannot be interpreted and/or correctly mapped into a SQL data type.

Consider the situation of a PIC 9(3) (a COBOL numeric value) containing three spaces. RTG conversion does not know what these spaces represent or how to interpret it. It cannot tell if the content is garbage or intentionally defined.

Another very common situation is date formats, where it happens that although the field content is clear it does not map into a valid SQL value DATE type. For example, a PIC 9(10) mapped into a date value may have its value set to 0, which is a good numeric value, but not a valid FairCom DB date!

A third common scenario that there are different COBOL conventions for how to represent persisted numeric data in the file. In particular, COBOL dialects have differing default conventions for how signed numeric sign values are encoded. The SQL engine must know which convention is in use and the data on disk must adhere to that convention, otherwise a conversion error occurs.

The XDD schema mapping contains information on how to handle conversion errors at the “schema” level (for an entire table) or at “field” level (for a single field) by setting the “onConvertError” attribute.

onConvertError

When a conversion error occurs the engine determines what to do based on the onConvertError setting for that specific field. If the onConvertError for the field missing, it uses the one set on the schema or, when that is missing, it uses the default. The possible behaviors are:

Automatically generated XDD schema mappings do not contain any onConversionError settings and do not contain any default values and application administrator intervention is required, at least initially, to properly configure. Once properly configured and verified that conversion errors are properly handled as desired, it is possible to automate the XDD modification using XDD rules. See Define External Rules.

When converting from SQL to COBOL there are situations where determining what the COBOL record buffer should contain is difficult if not impossible. Typical situations:

Null Values

In SQL it is possible to set a field flag to “NULL” indicating “missing information or inapplicable information” or “lack of a value.” The same concept does not exist outside SQL (for example, in COBOL ISAM files) where any field has a value. Usually applications have a specific sentinel value for "missing" field data. When a field is set to NULL by SQL, RTG conversion logic needs to know what value to store in the field. In COBOL there is no standard discipline for “uninitialized fields” (for example, in a table of “people,” the date of death is unknown while the person is alive). The field may contain a high-value, a low-value, spaces, zeros or even garbage; every application has its own discipline, which may be different on different fields.

To address this issue, the XDD file allows you to specify a “bindefault” or a “cbdefault” attribute for the field, which will be written to disk in place of a SQL NULL. Essentially, “bindefault” is a binary value to replace a SQL NULL and “cbdefault” is a COBOL value to replace a SQL NULL (see <field> schema element).

In this second case, it is worth noticing that if the default value set in the XDD is a valid value for the field type (e.g., 0 for numeric types) when reading the record, it will be converted into that value in SQL and not NULL as it was set. If the value is not a valid value (e.g., spaces for numeric types), a conversion error will occur when reading the record but the engine automatically handles it (unless the XDD specifies otherwise, as explained later) and exposes the value as NULL in SQL, as expected.

Hidden Fields

An XDD mapping may specify some fields are hidden to SQL, which is to say SQL is not aware of them. This occurs for “filler” fields or for redefines where a record portion is not redefined or simply because the person who generated the XDD decided not to expose some field (and the information it contains) to SQL.

When updating an existing record, hidden fields are not a problem, because when performing an update, the engine will convert and store only fields that are set by the update; the portion of the record belonging to hidden fields is left untouched.

When inserting a new record, hidden fields are a problem: SQL does not pass any value to store in these fields, because the SQL engine does not know they exist. However, the portion of the record belonging to these fields must be set. The engine sets it to the “default” value specified in the XDD for the specific hidden field which is consistent with the approach taken for NULL values.

However, if the default value is not specified you may encounter error -21126 (SQL) or possibly 4126, CTDBRET_CALLBACK_18 (Missing default for null field).

FairCom DB SQL Explorer will display the above SQL error if you attempt to insert a record into a table that has hidden fields without default values set in the rules file. To prevent this, add the following to your rules file:

<rule sequence="1002">

<when>

<field hidden="true"/>

</when>

<do>

<add>

<field cbdefault="0" onConvertError="null"/>

</add>

</do>

</rule>

Unsigned Fields

Unsigned COBOL types are mapped into SQL types, which are signed. SQL itself does not check for negative values, which are not acceptable values for the COBOL data types. If the XDD has defined a field as unsigned, attempting to set it to a negative value causes a CTDBRET_UNDERFLOW error, which is a common situation out-of-the-box.

Inline Redefines

It is possible for the XDD file to specify that the redefines are “expanded” as further fields in the same table instead of creating a separate SQL table. In this case, attempting to add a new record (i.e., INSERT INTO) fails with error CTDBRET_NOTYET (Not yet implemented).

The fact that the XDD initially does not contain any error handling information immediately exposes data conversion errors to a SQL user. This provides the way to begin troubleshooting data conversion errors and to identify the proper settings to specify in your XDD file.

Visually Check Data with FairCom DB SQL Explorer

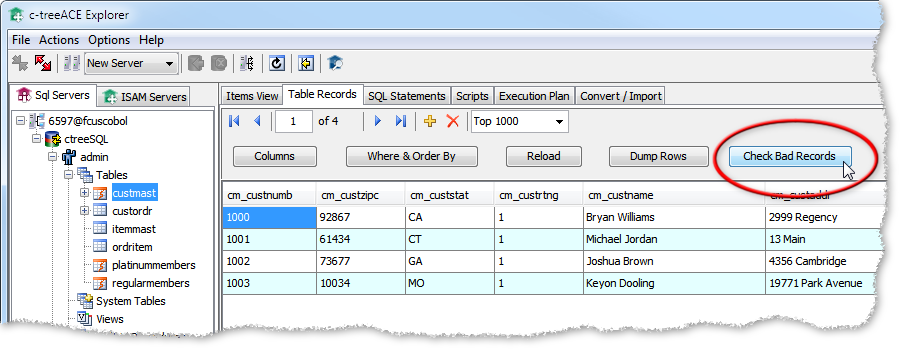

FairCom DB SQL Explorer and FairCom DB Explorer include a button to simplify identifying "bad" records.

To check for bad records in FairCom DB SQL Explorer or FairCom DB Explorer:

Check using a SQL Query

You can use the procedures in this section to identify data-conversion errors and use that information to fine-tune your XDD files.

If a query fails, it is possible the failure is due to a problem with SQL data conversion. Troubleshooting this type of error is quite easy with the following steps:

SELECT * FROM <table>

If none of the queries fail, the original query failure is not due to a conversion problem.

SELECT * FROM <table> ctoption(badrec)

ctoption(badrec) is a FairCom DB extension to SQL indicating the query should return only records having conversion errors and expose values that do not properly convert as NULL.

CTSQLCBK.FCS Log

The ctoption(badrec) command generates a log file, ctsqlcbk.fcs, in the FairCom DB SQL Server directory that can be used to determine the exact conversion error and the data causing it. This file lists all the fields that caused a conversion error exposed in SQL along with the value of the data that could not be converted. Note that the log contains information for the fields that result in propagating the conversion error to SQL. It does not log conversion errors that result in a SQL value because they were already handled successfully following the settings in the XDD.

Each log entry is made of three lines:

Convert error XXXX on field[YYYY]

Where XXXX is the error code indicating the cause of the conversion error, YYYY is the field on which the conversion error occurred.

For example:

Convert error 4028 on field[FIELD1]

{ctdbStringFormatToDateTime} on 0000000000 failed

00000000

Convert error 4028 on field[FIELD2]

{ctdbStringFormatToDate} on 00000000 failed

3030303030303030

Convert error 4118 on field[FIELD3]

[NormalizeCobolStr] ascii value is not a digit

2020202020202020

Convert error 4118 on field[FIELD4]

[NormalizeCobolStr] ascii value is not a digit

2020202020202020