The use of write barriers and commit delay has an impact on data safety and performance.

Write barriers enforce the orderly flushing of caches to disk by ensuring that all requests issued at levels above the barrier are satisfied before processing continues. Write barriers affect performance because they require the write to complete before the write call continues. Asynchronous (cached) and synchronous (flushed) writes are compared in the section titled Write Barriers.

Tests were conducted to determine the performance impact of commit delay and disk write cache. The results of these tests and configuration suggestions are presented in the section titled Commit Delay and Disk Write Cache.

A Linux system has several levels of caching, including the user process, the file system, the transport layer, the storage controller, and the hard disk drive (HDD). Think of these layers of caching as a stack, with the application at the top and the physical media to which the data is written (the hard disk platter) at the bottom.

When a disk has write cache enabled and the system loses power, the contents of the disk write cache are lost. This means that file data and file system metadata that the application or the file system believed had been written to disk might never have reached the disk. The data and metadata may also have been written to disk partially or out of order, leading to inconsistencies that can corrupt the file system.

The "write barrier" (or simply "barrier") enforces orderly flushing of these caches to disk. Essentially, the barrier ensures that all requests issued at levels above it are satisfied before processing continues. Write barriers guard against data loss and inconsistency of file system metadata.

The barrier affects performance because it requires the write to be complete before the write call continues.
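To make the role of the barrier concrete, here is a toy Python model of a disk with a volatile write cache. It is not a real driver or kernel interface: `ToyDisk`, `commit()`, and the "data" and "commit" blocks are hypothetical names chosen for illustration. The scenario assumes a journaling-style update in which a commit record must never reach the platter before the data it describes. Without a barrier, the cache is free to destage the commit record first, and a power loss at that moment leaves an inconsistent state.

```python
from dataclasses import dataclass, field


@dataclass
class ToyDisk:
    """Hypothetical model of a disk with a volatile write cache (not a real API)."""

    cache: list = field(default_factory=list)    # pending (block, data) writes; lost on power failure
    platter: dict = field(default_factory=dict)  # durable storage

    def write(self, block: str, data: str) -> None:
        # A cached write "completes" as soon as the data is in the volatile cache.
        self.cache.append((block, data))

    def destage_newest(self, n: int) -> None:
        # The cache may write pending requests to the platter in any order;
        # here it writes the n most recent ones first (out-of-order flushing).
        for _ in range(min(n, len(self.cache))):
            block, data = self.cache.pop()
            self.platter[block] = data

    def barrier(self) -> None:
        # Every request issued before the barrier reaches the platter
        # before any later request is accepted.
        for block, data in self.cache:
            self.platter[block] = data
        self.cache.clear()

    def power_loss(self) -> None:
        self.cache.clear()  # contents of the disk write cache are lost


def commit(disk: ToyDisk, use_barrier: bool) -> None:
    disk.write("data", "D")    # transaction data
    if use_barrier:
        disk.barrier()         # data must be durable before the commit record
    disk.write("commit", "C")  # commit record: declares the data valid


def crash_after_commit(use_barrier: bool) -> dict:
    disk = ToyDisk()
    commit(disk, use_barrier)
    disk.destage_newest(1)  # the cache happens to flush the commit record first
    disk.power_loss()
    return disk.platter


def consistent(platter: dict) -> bool:
    # A commit record on the platter without its data is the inconsistency described above.
    return "commit" not in platter or "data" in platter


if __name__ == "__main__":
    for use_barrier in (False, True):
        platter = crash_after_commit(use_barrier)
        print(f"barrier={use_barrier}: platter={platter} consistent={consistent(platter)}")
```

In this model, the barrier costs time because `commit()` cannot issue the commit record until the data has been forced out of the cache, which mirrors the performance trade-off described above.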

In the default mode, the barrier ensures that the data and metadata are written to the file system cache before the write call returns. In O_SYNC mode, the data and metadata must be written to the HDD cache before the write call returns. The diagram below shows this difference:

| Typical default for an asynchronous (cached) write | O_SYNC (flushed) write used for critical writes for transaction-controlled files |
|---|---|
| Application | Application |
| File System | File System |
| - - - BARRIER - - - | - - - BARRIER - - - |
| HDD Cache | HDD Cache |
| Disk Platter | Disk Platter |

Asynchronous (cached) vs. synchronous (flushed) writes. Notice that for O_SYNC writes, the call does not return until the data has been written to the HDD cache.
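The two write modes in the diagram can be sketched in Python with the standard `os` module. This is a minimal illustration, not FairCom DB Server code: `write_record()` is a hypothetical helper, and the timing loop only gives a rough sense of the cost of synchronous writes on whatever system runs it.

```python
import os
import tempfile
import time


def write_record(path: str, data: bytes, synchronous: bool) -> int:
    """Write data to path (hypothetical helper) and return the bytes written.

    synchronous=False: default (cached) write; write() may return as soon as
    the data is in the operating system's file system cache.
    synchronous=True: O_SYNC write; write() does not return until the kernel
    has passed the data and the metadata needed to retrieve it to the device.
    """
    flags = os.O_WRONLY | os.O_CREAT | os.O_TRUNC
    if synchronous:
        # O_SYNC must be requested at open time: on Linux, fcntl(F_SETFL)
        # silently ignores attempts to turn O_SYNC on afterwards.
        flags |= os.O_SYNC
    fd = os.open(path, flags, 0o644)
    try:
        written = 0
        while written < len(data):  # loop to handle short writes
            written += os.write(fd, data[written:])
        return written
    finally:
        os.close(fd)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        for sync in (False, True):
            path = os.path.join(d, "sync.dat" if sync else "cached.dat")
            start = time.perf_counter()
            for _ in range(100):
                write_record(path, b"x" * 4096, synchronous=sync)
            elapsed = time.perf_counter() - start
            print(f"O_SYNC={sync}: {elapsed:.3f} s for 100 writes")
```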

The bug in the Linux kernel mentioned earlier prevented O_SYNC mode from taking effect, so data was not written to disk before the write call returned. This produced unexpectedly high performance at the expense of data recoverability after a power loss.

It should be safe to disable write barriers (by adding barrier=0 to the mount options in /etc/fstab, or by passing -o nobarrier to mount) in either of the following situations:

• if disk write cache is disabled; or

• if disk write cache is enabled and the disk has a properly configured battery backup or uninterruptible power supply (UPS).
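Before relying on either condition, it helps to confirm which options a file system is actually mounted with. The sketch below assumes a Linux system with an ext4 file system and reads `/proc/mounts`; `mount_options_for()`, `barriers_disabled()`, and `safe_to_disable_barriers()` are hypothetical helpers. Determining whether the disk write cache is enabled and whether a battery backup or UPS is in place is left to the caller (for ATA disks, a tool such as `hdparm -W` can report the write-cache setting).

```python
def mount_options_for(mount_point: str, mounts_file: str = "/proc/mounts"):
    """Return the option string for mount_point, or None if it is not mounted.

    If several entries match (stacked mounts), the last one is the mount
    currently visible, so the last match wins.
    """
    options = None
    with open(mounts_file) as f:
        for line in f:
            fields = line.split()  # device, mount point, type, options, dump, pass
            if len(fields) >= 4 and fields[1] == mount_point:
                options = fields[3]
    return options


def barriers_disabled(mount_options: str) -> bool:
    """Return True if an ext4-style option string turns write barriers off.

    Options are applied left to right, so a later 'barrier' or 'barrier=1'
    overrides an earlier 'nobarrier' or 'barrier=0'.
    """
    disabled = False
    for opt in mount_options.split(","):
        opt = opt.strip()
        if opt in ("nobarrier", "barrier=0"):
            disabled = True
        elif opt in ("barrier", "barrier=1"):
            disabled = False
    return disabled


def safe_to_disable_barriers(write_cache_enabled: bool, power_protected: bool) -> bool:
    # The two situations listed above: write cache disabled, or write cache
    # enabled with a properly configured battery backup or UPS.
    return (not write_cache_enabled) or power_protected
```

For example, `barriers_disabled(mount_options_for("/data") or "")` reports whether a (hypothetical) `/data` mount has barriers off; if it does, `safe_to_disable_barriers()` should also be true for that disk.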

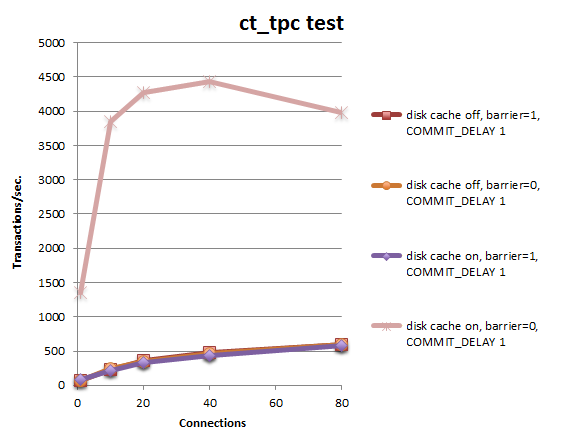

The effects of commit delay and disk write cache on FairCom DB Server performance were tested. All tests used the following options:

COMPATIBILITY LOG_WRITETHRU

COMPATIBILITY TINDEX_WRITETHRU

The test results are as follows (tps = transactions per second; larger numbers are better):

ct_tpc test with fcntl() bug fix; ext4 file system, data=ordered. Values are average tps over 20 seconds for each number of clients.

| Disk Write Cache | Barrier | COMMIT | 1 client | 10 clients | 20 clients | 40 clients | 80 clients |
|---|---|---|---|---|---|---|---|
| off | 1 | 1 | 76 | 236 | 358 | 483 | 600 |
| off | 0 | 1 | 76 | 248 | 349 | 463 | 592 |
| on | 1 | 1 | 79 | 220 | 332 | 434 | 586 |
| on | 0* | 1 | 1358 | 3848 | 4270 | 4435 | 3980 |

* Battery backup or uninterruptible power supply (UPS) required for data integrity.
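The size of the effect in the table can be quantified with a little arithmetic. The following Python sketch copies the four rows of results and computes each configuration's throughput relative to the baseline (disk write cache off, barrier on); the names `RESULTS`, `speedup()`, and `peak()` are for illustration only.

```python
CLIENTS = (1, 10, 20, 40, 80)

# Average tps from the ct_tpc results, keyed by (disk write cache, barrier).
RESULTS = {
    ("off", 1): (76, 236, 358, 483, 600),
    ("off", 0): (76, 248, 349, 463, 592),
    ("on", 1): (79, 220, 332, 434, 586),
    ("on", 0): (1358, 3848, 4270, 4435, 3980),  # requires battery backup or UPS
}


def speedup(config, baseline=("off", 1)):
    """Throughput of config divided by baseline, per client count, to one decimal."""
    return [round(a / b, 1) for a, b in zip(RESULTS[config], RESULTS[baseline])]


def peak(config):
    """Return (clients, tps) at the highest measured throughput for config."""
    tps = RESULTS[config]
    i = max(range(len(tps)), key=tps.__getitem__)
    return CLIENTS[i], tps[i]


if __name__ == "__main__":
    print(speedup(("on", 0)))  # → [17.9, 16.3, 11.9, 9.2, 6.6]
    print(peak(("on", 0)))     # → (40, 4435)
```

With disk write cache on and the barrier off, throughput is roughly 6.6 to 17.9 times the baseline, peaking at 4435 tps with 40 clients. Turning off the barrier with disk write cache disabled changes the results by no more than about 5%.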

The results above are the most significant cases from a larger series of tests run at different settings.

The following conclusions were drawn based on these tests:

Some options, such as putting an uninterruptible power supply (UPS) on a system, improve data recoverability without affecting performance.

If you rely on a UPS, be sure to configure it to cleanly bring the system down before the battery is exhausted! Note that the FairCom DB Server will generally come down cleanly if it receives a shutdown signal from the operating system. However, we strongly recommend testing this operation on your system!

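The best performance is achieved by turning off the barrier while leaving disk write cache enabled, a configuration that requires a battery backup or UPS to ensure data integrity. With disk write cache disabled, turning off the barrier made little difference.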

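The highest transaction rates are seen with the following configuration (disk write cache on, barrier 0 in the test results):

• disk write cache enabled;

• write barriers disabled (barrier=0); and

• a battery backup or UPS in place to protect data integrity.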

Best Practices

The best configuration for your system depends on many factors. There is no substitute for performing tests to determine the best settings for your environment.

Checklist

In evaluating your configuration, consider the questions in this checklist: