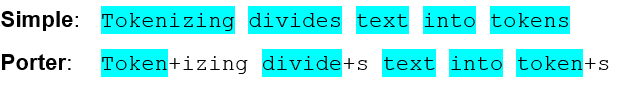

Internally, FairCom Full-Text Search uses a "tokenizer" to divide text into "tokens," which are roughly equivalent to a list of categorized words. A tokenizer follows a set of rules for extracting tokens (usually single words) from a text string or search query. Several algorithms can be used to tokenize text. The simplest uses white space to determine the boundaries between words. More advanced algorithms can be used when necessary.
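
To make the idea concrete, here is a minimal Python sketch of a tokenizer that splits on white space and punctuation and ignores case. It is an illustration only, not FairCom's implementation; the function name `simple_tokenize` and the rule "a token is a run of ASCII letters or digits" are assumptions chosen for the example.

```python
import re

def simple_tokenize(text):
    """Return lowercase tokens, treating any run of non-alphanumeric
    characters (white space, punctuation) as a boundary.

    Hypothetical helper for illustration; not part of any FairCom API.
    """
    return [token.lower() for token in re.findall(r"[A-Za-z0-9]+", text)]

# The same function is applied to both the stored text and the search query,
# so that both sides are compared token by token.
document_tokens = simple_tokenize("Full-Text Search: fast, simple!")
query_tokens = simple_tokenize("SEARCH")

print(document_tokens)  # ['full', 'text', 'search', 'fast', 'simple']
print(all(t in document_tokens for t in query_tokens))  # True
```

Because both the text and the query pass through the same rules, a query for "SEARCH" matches text containing "Search".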

FairCom Full-Text Search provides built-in support for several tokenizers:

| Type | Algorithm | Recommended Usage |
|---|---|---|
| Simple | Uses white space and punctuation to delimit tokens. Not case-sensitive. | This is the default. Allows for quick and easy searching. |
| Porter | Uses "stemming" to reduce words to a common root so that grammatically similar words match. For example, "searching" and "searched" have the same stem, "search." | The Porter tokenizer creates more compact indices by reducing words to their simplest form. This type of stemming can return false-positive results. |
| ICU | Provides international support, following Unicode rules for handling supported languages. Case-sensitive (depending on configuration). | When Unicode support is required, this is the recommended tokenizer. |
| Custom | Allows FairCom customers to develop their own tokenizers for special requirements. | You can create a custom tokenizer if you have special requirements. Sample code is provided to get you started. |
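
The trade-off described for stemming can be sketched in a few lines of Python. The function below is a deliberately naive suffix stripper, not the Porter algorithm and not FairCom's code; the name `naive_stem`, the suffix list, and the minimum stem length of three are assumptions made for this example. It shows both how related word forms collapse to one stem and how unrelated words can collide, which is the source of false positives.

```python
def naive_stem(word):
    """Strip one common English suffix, keeping at least three characters.

    Hypothetical, simplified stand-in for a real stemmer such as Porter.
    """
    word = word.lower()
    for suffix in ("ing", "ed", "es", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

# Grammatically related forms reduce to the same stem, so they match.
print(naive_stem("searching"))  # search
print(naive_stem("searched"))   # search

# Unrelated words can reduce to the same stem as well: a false positive.
print(naive_stem("news") == naive_stem("new"))  # True
```

An index built on stems stores one entry where several word forms would otherwise be stored, which is why a stemmed index tends to be more compact.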

See also: